YouTube wants the world to know that it’s doing a better job than ever of enforcing its own moderation rules. The company says that a shrinking number of people see problematic videos on its site — such as videos that contain graphic violence, scams, or hate speech — before they’re taken down.

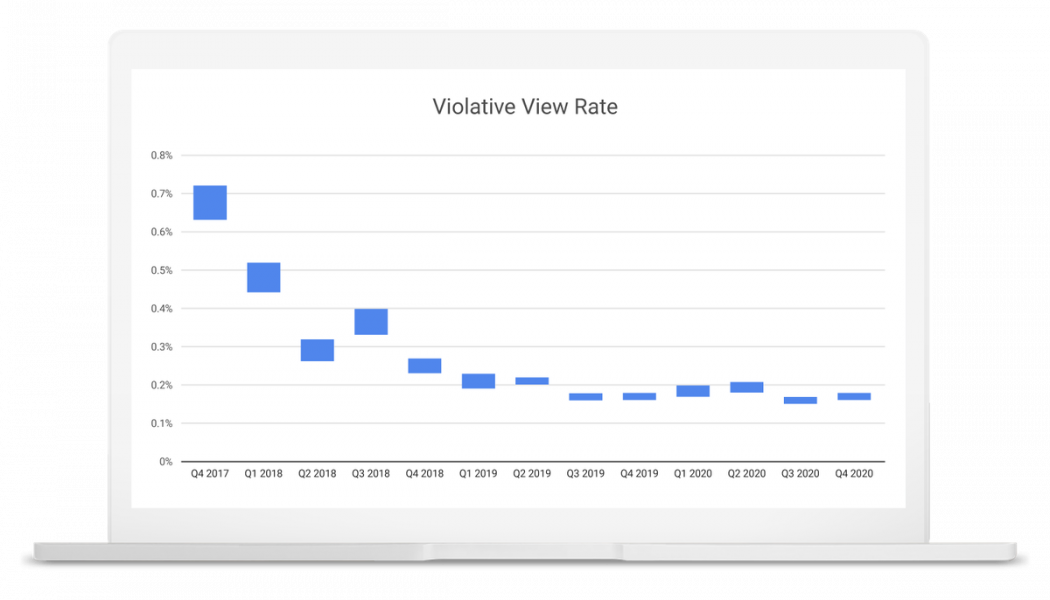

In the final months of 2020, up to 18 out of every 10,000 views on YouTube were on videos that violate the company’s policies and should have been removed before anyone watched them. That’s down from 72 out of every 10,000 views in the fourth quarter of 2017, when YouTube started tracking the figure.

But the numbers come with an important caveat: while they measure how well YouTube is doing at limiting the spread of troubling clips, they’re ultimately based on what videos YouTube believes should be removed from its platform — and the company still allows some obviously troubling videos to stay up.

The stat is a new addition to YouTube’s community guidelines enforcement report, a transparency report updated quarterly with details on the types of videos being removed from the platform. This new figure is called Violative View Rate, or VVR, and tracks how many views on YouTube happen on videos that violate its guidelines and should be taken down.

This figure is essentially a way for YouTube to measure how well it’s doing at moderating its own site, based on its own rules. The higher the VVR, the more problematic videos are spreading before YouTube can catch them; the lower the VVR, the better YouTube is doing at stamping out prohibited content.
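To make the arithmetic concrete, here is a minimal sketch that treats VVR as a simple ratio: views on violating videos per 10,000 total views. The function name `violative_view_rate` and the view counts passed to it are illustrative assumptions; the article does not describe how YouTube actually counts or estimates violative views. Only the two headline figures (72 and 18 per 10,000) come from the report.

```python
def violative_view_rate(violative_views: int, total_views: int) -> float:
    """Return views on violating videos per 10,000 total views.

    Hypothetical helper for illustration only; not YouTube's method.
    """
    if total_views <= 0:
        raise ValueError("total_views must be positive")
    # Multiply before dividing to keep the result exact for round inputs.
    return violative_views * 10_000 / total_views


# Headline figures from the report, expressed per 10,000 views.
q4_2017 = violative_view_rate(72, 10_000)
q4_2020 = violative_view_rate(18, 10_000)

# Relative decline between the two quarters.
relative_drop = (q4_2017 - q4_2020) / q4_2017

print(f"Q4 2017: {q4_2017:.0f} per 10,000 views ({q4_2017 / 100:.2f}%)")
print(f"Q4 2020: {q4_2020:.0f} per 10,000 views ({q4_2020 / 100:.2f}%)")
print(f"Relative drop: {relative_drop:.0%}")
```

On these figures, the upper-bound rate fell from 0.72 percent of views to 0.18 percent, a 75 percent relative decline.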

YouTube made a chart showing how the figure has fallen since it started measuring the number for internal use:

[Chart: YouTube’s Violative View Rate over time]

The steep drop from 2017 to 2018 came after YouTube started relying on machine learning, rather than user reports, to spot problematic videos, Jennifer O’Connor, YouTube’s product director for trust and safety, said during a briefing with reporters. The goal is “getting this number as close to zero as possible.”

Videos that violate YouTube’s advertising guidelines, but not its overall community guidelines, aren’t included in the VVR figure since they don’t warrant removal. So-called “borderline content,” which bumps up against the rules without quite violating them, is left out for the same reason.

O’Connor said YouTube’s team uses the figure internally to understand how well they’re doing at keeping users safe from troubling content. If it’s going up, YouTube can try to figure out what types of videos are slipping through and prioritize developing its machine learning to catch them. “The North Star for our team is to keep users safe,” O’Connor said.