Twitter is piloting a new feature that will let users add specific content warnings to individual photos and videos sent out in tweets. The platform noted that the feature would be available to “some” users during the test.

People use Twitter to discuss what’s happening in the world, which sometimes means sharing unsettling or sensitive content. We’re testing an option for some of you to add one-time warnings to photos and videos you Tweet out, to help those who might want the warning. pic.twitter.com/LCUA5QCoOV

— Twitter Safety (@TwitterSafety) December 7, 2021

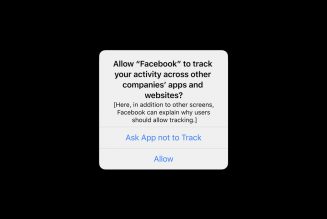

Although Twitter already lets users add content warnings to tweets, the only way to do so is to apply the warning to all of your tweets. In other words, every photo or video you post gets a content warning, regardless of whether it contains sensitive material. The feature Twitter is now testing lets you add a warning to individual tweets and assign a specific category to it.

Based on the video Twitter posted, it appears that when you're editing an image or video, tapping the flag icon in the bottom right corner of the toolbar lets you add a content warning. The next screen lets you categorize the warning, with choices including "nudity," "violence," or "sensitive." Once you post the tweet, the image or video appears blurred and overlaid with a content warning that explains why you flagged it. Users can click through the warning if they want to view the content.

If you don't flag sensitive material when you post it, Twitter will, as it already does, rely on user reports to decide whether your content should carry a warning. In addition to its content warning experiment, Twitter announced yesterday that it's trying out a "human-first" approach to the reporting process. Instead of asking users which rules a tweet breaks, it will let them describe what happened in their own words, and Twitter will use that description to determine the specific violation.