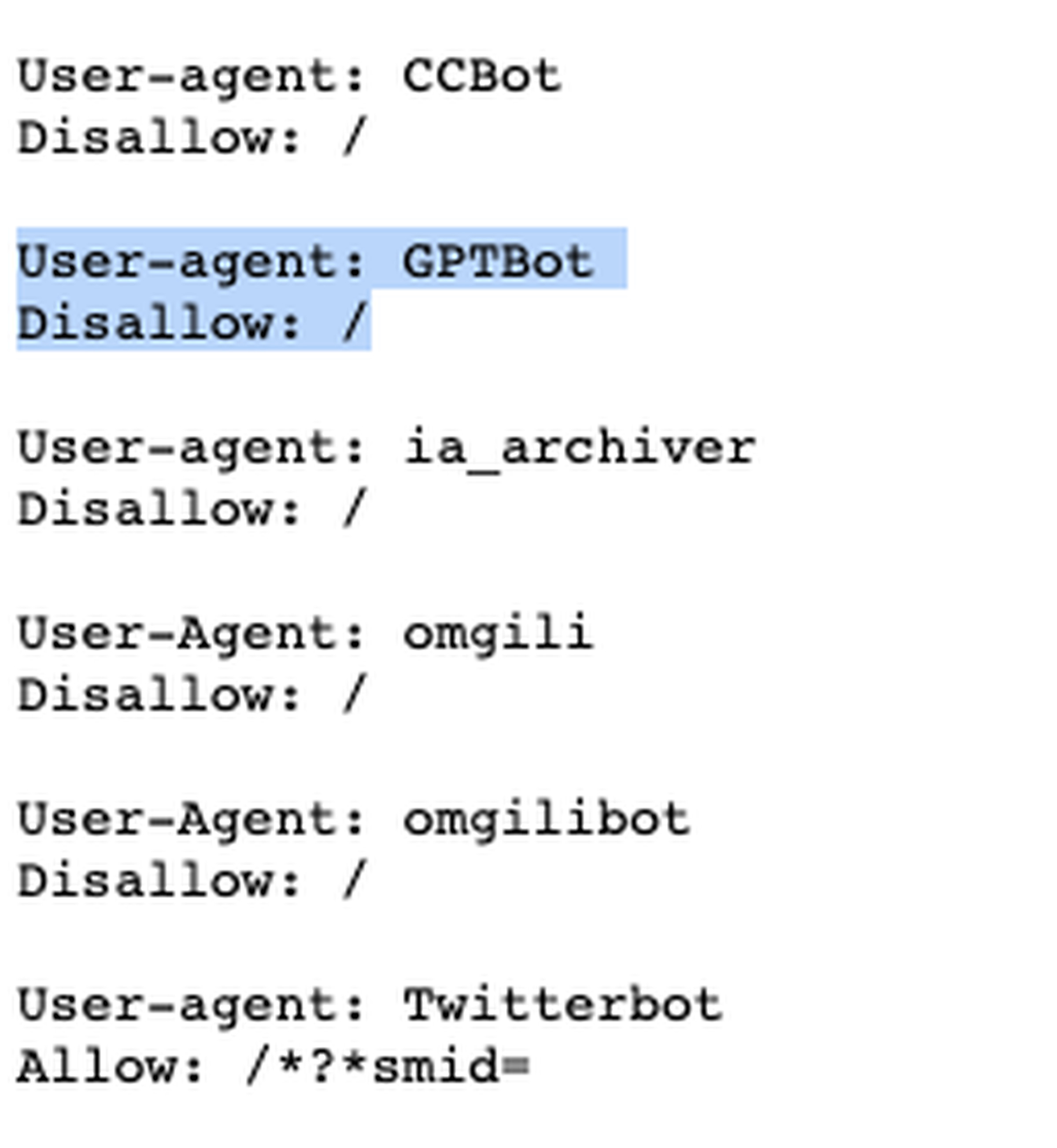

The NYT’s robots.txt file, which tells automated crawlers built to index the internet which parts of the site they may access, now specifically disallows OpenAI’s GPTBot.

The New York Times has blocked OpenAI’s web crawler, meaning that OpenAI can’t use content from the publication to train its AI models. If you check the NYT’s robots.txt page, you can see that the NYT disallows GPTBot, the crawler that OpenAI introduced earlier this month. Based on the Internet Archive’s Wayback Machine, it appears the NYT blocked the crawler as early as August 17th.
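For readers curious what a robots.txt block looks like in practice, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The sample rules and the `example.com` URLs are hypothetical stand-ins for illustration, not a copy of the NYT’s actual file, and `is_allowed` is a helper name made up for this example. Keep in mind that robots.txt is a convention crawlers choose to honor, so a check like this shows what a well-behaved bot would conclude rather than a technical barrier.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for illustration only (not the NYT's real file):
# GPTBot is barred from the whole site; every other bot is only kept out of /private/.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/2023/08/some-article.html"  # assumed URL
    print("GPTBot allowed:", is_allowed(SAMPLE_ROBOTS_TXT, "GPTBot", page))
    print("Other bot allowed:", is_allowed(SAMPLE_ROBOTS_TXT, "SomeOtherBot", page))
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.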

The change comes after the NYT updated its terms of service at the beginning of this month to prohibit the use of its content to train AI models. The NYT and OpenAI didn’t immediately reply to a request for comment.

The NYT is also considering legal action against OpenAI for intellectual property rights violations, NPR reported last week. If it does sue, the Times would join others like Sarah Silverman and two other authors, who sued the company in July over its use of Books3, a dataset used to train ChatGPT that may contain thousands of copyrighted works, as well as Matthew Butterick, a programmer and lawyer who alleges the company’s data scraping practices amount to software piracy.