Responsible AI

Why Responsible AI is Built Around Human-Centred Design

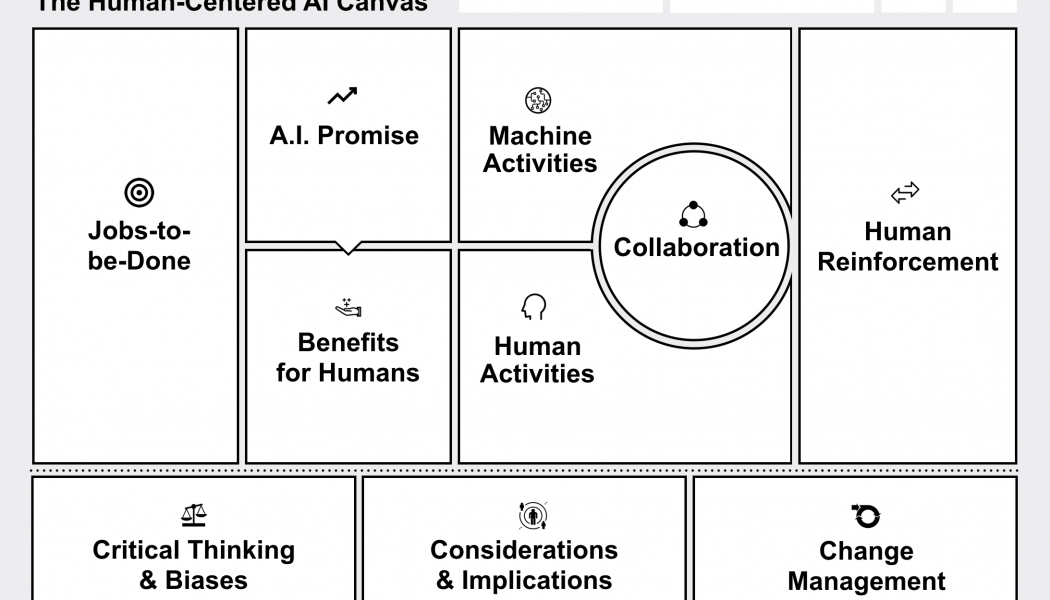

Responsible artificial intelligence (AI) provides a framework for building trust in an organisation's AI solutions, according to a report from Accenture. It is defined as the practice of designing, developing, and deploying AI with good intentions: to empower employees and businesses, and to have a fair impact on customers and society. This, in turn, lets companies foster trust and scale AI with confidence. As AI becomes commonplace, more organisations around the world see the need to adopt responsible AI. For example, Microsoft relies on its AI, Ethics, and Effects in Engineering and Research (Aether) Committee to advise its leadership on the challenges and opportunities presented by AI innovations. Among the elements the committee examines is how fairly AI sys...