Proctorio, exam surveillance software designed to keep students from cheating while taking tests, relies on open-source software that has a history of racial bias issues, according to a report by Motherboard. The issue was uncovered by a student who worked out how the software performs facial detection and found that it fails to recognize black faces more than half the time.

Proctorio, like other programs of its kind, is designed to keep an eye on students while they’re taking tests. However, many students of color have reported trouble getting the software to see their faces, sometimes having to resort to extreme measures to get it to recognize them. This could cause problems for those students: Proctorio will flag them to instructors if it doesn’t detect their face.

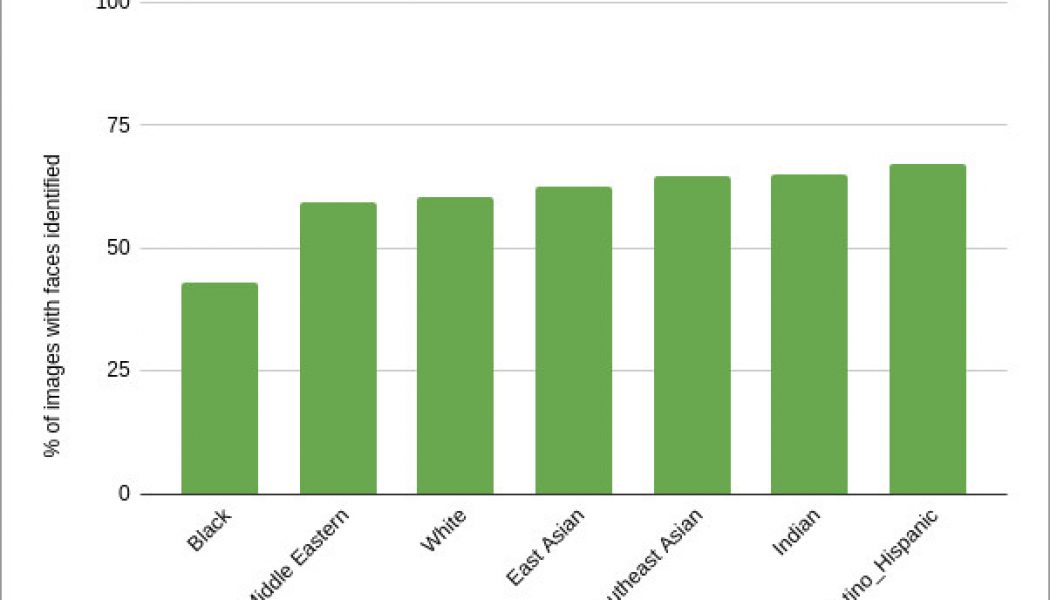

After hearing anecdotal reports of these issues, Akash Satheesan decided to look into the facial detection methods the software was using. He discovered that it looked and performed identically to OpenCV, an open-source computer vision library that can be used to recognize faces (and which has had issues with racial bias in the past). After learning this, he ran tests using OpenCV and a data set designed to validate how well machine vision algorithms deal with diverse faces. According to his second blog post, the results were not good.


Not only did the software fail to recognize black faces more than half the time, it wasn’t particularly good at recognizing faces of any ethnicity: the highest hit rate was under 75 percent. For its report, Motherboard contacted a security researcher, who was able to validate both Satheesan’s results and his analysis. Proctorio itself also confirms on its licenses page that it uses OpenCV, though it doesn’t go into detail about how.

In a statement to Motherboard, a Proctorio spokesperson said that Satheesan’s tests prove that the software only looks to detect faces, not recognize the identities associated with them. While that may be a (small) comfort for students who may rightly be worried about privacy issues related to proctoring software, it doesn’t address the accusations of racial bias at all.

This isn’t the first time Proctorio has been called out for failing to recognize diverse faces: the issues it caused for students of color were cited by one university as a reason why it would not renew its contract with the company. Senator Richard Blumenthal (D-CT) even called out the company when talking about bias in proctoring software.

While racial bias in code is nothing new, it’s especially distressing to see it affecting students who are just trying to do their schoolwork, particularly in a year when remote learning is the only option available to some.