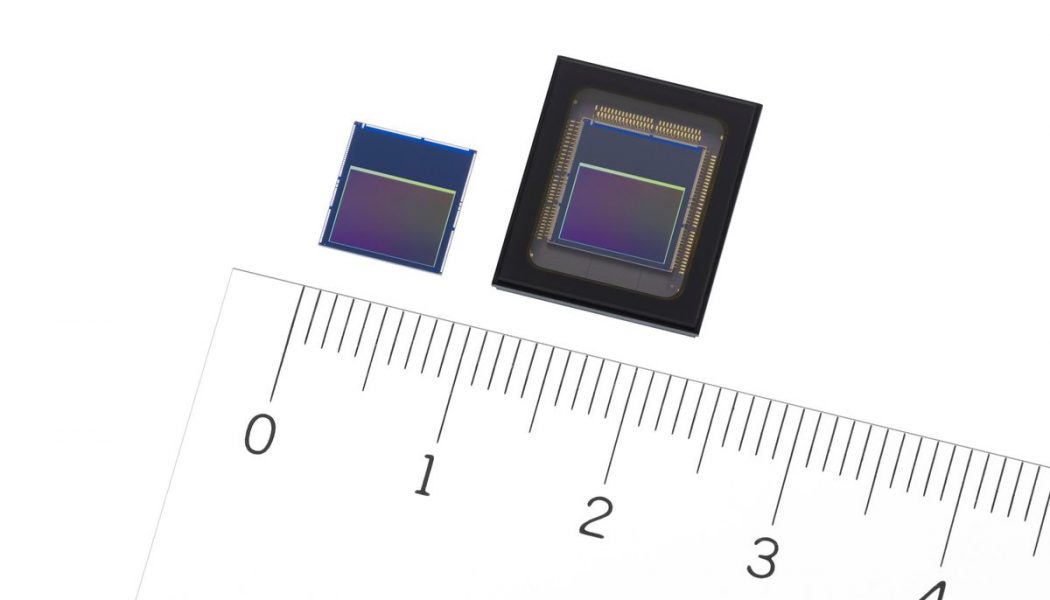

Sony has announced the world’s first image sensor with integrated AI smarts. The new IMX500 sensor incorporates both processing power and memory, allowing it to perform machine learning-powered computer vision tasks without extra hardware. The result, says Sony, will be faster, cheaper, and more secure AI cameras.

Over the past few years, devices ranging from smartphones to surveillance cameras have benefited from the integration of AI. Machine learning can be used not only to improve the quality of the pictures we take, but also to understand video like a human would, identifying people and objects in frame. The applications of this technology are huge (and sometimes worrying), enabling everything from self-driving cars to automated surveillance.

But many applications rely on sending images and videos to the cloud to be analyzed. This can be a slow and insecure journey, exposing data to hackers. In other scenarios, manufacturers have to install specialized processing cores on devices to handle the extra computational demand, as with new high-end phones from Apple, Google, and Huawei.

But Sony says its new image sensor offers a more streamlined solution than either of these approaches.

“There are some other ways to implement these solutions,” Sony vice president of business and innovation Mark Hanson told The Verge, referencing edge computing, which uses dedicated AI chips not attached directly to the image sensor. “But I do not believe they will be anywhere close to as cost effective as us shipping image sensors in the billions.”

Sony’s huge presence in the image sensor market will certainly help push this technology to clients at scale. Hanson notes that the company has more than 60 percent market share, and shipped about 1.6 billion sensors last year, including for all three cameras in Apple’s iPhone 11 Pro.

This first-generation AI image sensor, though, is unlikely to end up in consumer devices like smartphones and tablets, at least to begin with. Instead, Sony will be targeting retailers and industrial clients, with Hanson referencing Amazon’s cashierless Go stores as a potential application.

In Amazon’s Go stores, the retailer uses scores of AI-enabled cameras to track shoppers and charge them for objects they grab from the shelves. “They put hundreds of cameras, and they’re running petabytes of data, on a daily basis through a small convenience store,” says Hanson. Reports suggest that the resulting hardware costs have slowed the roll-out of these stores. “But if we can miniaturize that capability and put it on the backside of a chip we can do all sorts of interesting things.”

In addition to cost savings there are privacy benefits. If the AI chip is stuck directly onto the back of the image sensor then object detection can be done on-device. Instead of sending off data to be analyzed, either to the cloud or a nearby processor, the image sensor itself performs whatever AI analysis is necessary and simply produces the metadata instead.

So, if you want to create a smart camera that detects whether or not someone is wearing a mask (a very real concern right now), then an IMX500 image sensor can be loaded with the relevant algorithm, which allows the camera to send off quick “yes” or “no” pings.

“Now we’ve eliminated what would normally be a 60 frames per second, 4K video stream to just that one ‘hey, I recognize this object,’” says Hanson. “That can reduce data traffic [and] it also helps things like privacy.”
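To get a feel for the scale of that reduction, here is a rough back-of-the-envelope sketch. The numbers are assumptions, not Sony's: it treats the 4K stream as uncompressed 3840×2160 video at 3 bytes per pixel (real cameras compress heavily, so actual savings would be smaller) and assumes the sensor emits a single byte of "yes/no" metadata per frame.

```python
# Back-of-the-envelope comparison: raw 4K/60 video vs. per-frame metadata.
# All constants below are illustrative assumptions, not Sony specifications.

WIDTH, HEIGHT = 3840, 2160        # assumed 4K UHD resolution
BYTES_PER_PIXEL = 3               # assumed uncompressed 8-bit RGB
FPS = 60                          # frame rate quoted by Hanson
METADATA_BYTES_PER_FRAME = 1      # assumed one-byte "yes"/"no" result


def raw_video_bytes_per_second(width=WIDTH, height=HEIGHT,
                               bpp=BYTES_PER_PIXEL, fps=FPS):
    """Bytes per second for an uncompressed video stream."""
    return width * height * bpp * fps


def metadata_bytes_per_second(bytes_per_frame=METADATA_BYTES_PER_FRAME,
                              fps=FPS):
    """Bytes per second if only a small result is sent for each frame."""
    return bytes_per_frame * fps


raw = raw_video_bytes_per_second()
meta = metadata_bytes_per_second()

print(f"raw video: {raw / 1e9:.2f} GB/s")
print(f"metadata:  {meta} B/s")
print(f"reduction: {raw // meta:,}x")
```

Under these assumptions the raw stream is on the order of a gigabyte per second while the metadata is tens of bytes per second, which is the gap Hanson is pointing at.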

Another big application is industrial automation, where image sensors are needed to keep so-called co-bots (robots designed to work in close proximity to humans) from bashing their flesh-and-blood colleagues. Here the main advantage of an integrated AI image sensor is speed. If a co-bot detects a human where they shouldn’t be and needs to come to a quick stop, then processing that information as quickly as possible is paramount.

Sony says the IMX500 is much faster for these sorts of tasks than many other AI cameras, with the ability to apply a standard image recognition algorithm (MobileNet V1) to a single video frame in just 3.1 milliseconds. By comparison, says Hanson, competitors’ chips, such as those made by the Intel-owned Movidius (which are used in Google’s Clips camera and DJI’s Phantom 4 drone) can take hundreds of milliseconds — even seconds — to process.
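A quick sketch shows why those milliseconds matter for something like a co-bot. The 3.1 ms figure is Sony's; everything else here is a hypothetical assumption for illustration: a robot arm moving at 1 meter per second, and a 300 ms figure standing in for "hundreds of milliseconds." The calculation covers inference time only, not image capture, readout, or the time needed to brake.

```python
# How far does a moving arm travel while one frame is being analyzed?
# Only INFERENCE_MS comes from the article; the other values are assumed.

INFERENCE_MS = 3.1          # Sony's figure for MobileNet V1 on one frame
SLOW_INFERENCE_MS = 300.0   # hypothetical stand-in for "hundreds of ms"
ARM_SPEED_M_PER_S = 1.0     # hypothetical co-bot arm speed


def max_frames_per_second(latency_ms):
    """Upper bound on frames analyzed per second at a given latency."""
    return 1000.0 / latency_ms


def distance_travelled_mm(speed_m_per_s, latency_ms):
    """Distance covered during the latency window.

    1 m/s is 1 mm per millisecond, so m/s multiplied by ms gives mm.
    """
    return speed_m_per_s * latency_ms


for label, latency in [("IMX500", INFERENCE_MS),
                       ("slower chip", SLOW_INFERENCE_MS)]:
    fps = max_frames_per_second(latency)
    mm = distance_travelled_mm(ARM_SPEED_M_PER_S, latency)
    print(f"{label}: up to {fps:.1f} frames/s, arm moves {mm:.1f} mm")
```

With these assumed numbers, the arm moves a few millimeters during a 3.1 ms analysis, versus roughly 30 centimeters during a 300 ms one.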

The big bottleneck, though, is the ability of the IMX500 to handle more complex analytical tasks. Right now, says Hanson, the image sensor can only work with pretty “basic” algorithms. That means that more sophisticated and varied tasks, like driving an autonomous car, will certainly require dedicated AI hardware for the foreseeable future. Instead, think of the IMX500 as a simple, single-application device.

But this is only the first generation, and the technology will undoubtedly improve in future. Right now, cameras are smarter because they send their data to computers. In the future, the camera itself will be the computer, and all the smarter for it.

Test samples of the IMX500 have already started shipping to early customers with prices starting at ¥10,000 ($93). Sony expects the first products using the image sensor to arrive in the first quarter of 2021.