More than half of the images on the web are missing alt text, according to Microsoft. Image labels (alternative text) are key for many people who are blind or have low vision, as they give screen readers text to read aloud. Microsoft is now attempting to fill the gap with auto-generated alt text for images on the web.

Screen readers currently read out “unlabeled graphic” if an image has no description at all, which isn’t very helpful. Microsoft Edge has now been updated to improve the screen reader experience for images on the web that lack alt text.

“When a screen reader finds an image without a label, that image can be automatically processed by machine learning (ML) algorithms to describe the image in words and capture any text it contains,” explains Travis Leithead, a program manager on Microsoft’s Edge platform team. “The algorithms are not perfect, and the quality of the descriptions will vary, but for users of screen readers, having some description for an image is often better than no context at all.”

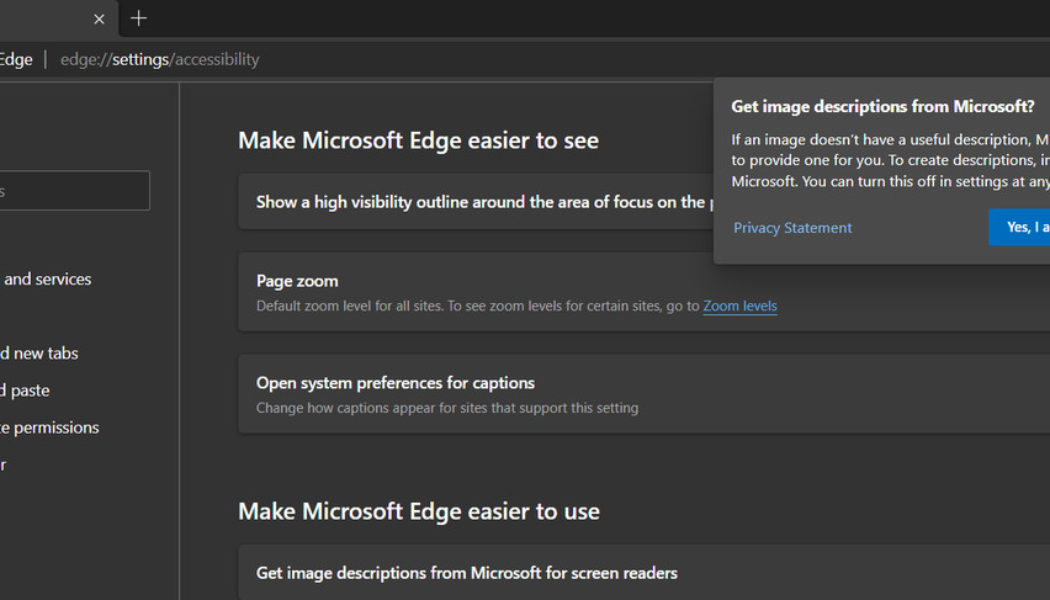

Microsoft Edge can now send unlabeled images to Microsoft’s Azure computer vision API for processing, which is governed by Microsoft’s privacy promises. The vision API creates alt text in English, Spanish, Japanese, Portuguese, or Simplified Chinese, which screen readers can then read aloud. Microsoft Edge won’t attempt to add automatic labels to images that are smaller than 50 x 50 pixels, very large image files, images that are marked as decorative, or images that the vision API categorizes as pornographic, gory, or sexually suggestive.
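To make those exclusion rules concrete, here is a minimal Python sketch of how such an eligibility check might look. It is an illustration only, not Edge’s actual code: the `ImageInfo` type, the `should_auto_label` function, and the category strings are hypothetical names, and the 4 MB size cap is an assumed stand-in because Microsoft only says “very large image files.” The sketch also assumes that an image counts as too small if either side is under 50 pixels, and it treats the content category as already known, whereas in Edge that classification comes back from the vision API.

```python
from dataclasses import dataclass
from typing import Optional

# From the article: images smaller than 50 x 50 pixels are skipped.
MIN_SIDE_PX = 50

# Assumption: the article only says "very large image files";
# 4 MB is a placeholder value chosen for illustration.
MAX_FILE_BYTES = 4 * 1024 * 1024

# Categories the article says are never auto-labeled (hypothetical label strings).
BLOCKED_CATEGORIES = {"pornographic", "gory", "sexually suggestive"}


@dataclass
class ImageInfo:
    """Hypothetical summary of one image found on a page."""
    width: int
    height: int
    file_bytes: int
    alt: Optional[str] = None        # author-provided alt text, if any
    decorative: bool = False         # marked as decorative by the page author
    category: Optional[str] = None   # content category from a classifier, if known


def should_auto_label(img: ImageInfo) -> bool:
    """Return True if the image qualifies for an auto-generated description."""
    if img.alt and img.alt.strip():
        return False  # already labeled by the author
    if img.decorative:
        return False  # decorative images are meant to be skipped by screen readers
    if img.width < MIN_SIDE_PX or img.height < MIN_SIDE_PX:
        return False  # too small (assumed: either side under the minimum)
    if img.file_bytes > MAX_FILE_BYTES:
        return False  # too large (assumed threshold)
    if img.category in BLOCKED_CATEGORIES:
        return False  # excluded content categories
    return True


if __name__ == "__main__":
    photo = ImageInfo(width=800, height=600, file_bytes=120_000)
    icon = ImageInfo(width=16, height=16, file_bytes=900)
    print(should_auto_label(photo), should_auto_label(icon))
```

Keeping the thresholds as named constants makes it easy to swap in the real values if Microsoft publishes them.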

Microsoft is rolling out this new feature immediately in Microsoft Edge for Windows, Mac, and Linux, but it won’t be available in Edge on Android or iOS yet. You can try the new feature out by enabling “Get image descriptions from Microsoft for screen readers” in edge://settings/accessibility, and using Narrator or another screen reader to browse the web.

“This feature is still new, and we know that we are not done,” says Leithead. “We’ve already found some ways to make this feature even better, such as when images do have a label, but that label is not very helpful. Continuous image recognition and algorithm improvements will also refine the quality of the service.”