AI search tools are getting better, but they don’t yet understand what a search engine really is or how we really use one.

AI is coming for the search business. Or so we’re told. As Google seems to keep getting worse, and tools like ChatGPT, Google Gemini, and Microsoft Copilot seem to keep getting better, we appear to be barreling toward a new way to find and consume information online. Companies like Perplexity and You.com are pitching themselves as next-gen search products, and even Google and Bing are making huge bets that AI is the future of search. Bye bye, 10 blue links; hello direct answers to all my weird questions about the world.

But the thing you have to understand about a search engine is that a search engine is many things. For all the people using Google to find important and hard-to-access scientific information, orders of magnitude more are using it to find their email inbox, get to Walmart’s website, or remember who was president before Hoover. And then there’s my favorite fact of all: that a vast number of people every year go to Google and type “google” into the search box. We mostly talk about Google as a research tool, but in reality, it’s asked to do anything and everything you can think of, billions of times a day.

The real question in front of all these would-be Google killers, then, is not how well they can find information. It’s how well they can do everything Google does. So I decided to put some of the best new AI products to the real test: I grabbed the latest list of most-Googled queries and questions according to the SEO research firm Ahrefs and plugged them into various AI tools. In some instances, I found that these language model-based bots are genuinely more useful than a page of Google results. But in most cases, I discovered exactly how hard it will be for anything — AI or otherwise — to replace Google at the center of the web.

People who work in search always say there are basically three types of queries. First and most popular is navigation, which is just people typing the name of a website to get to that website. Virtually all of the top queries on Google, from “youtube” to “wordle” to “yahoo mail,” are navigation queries. In reality, this is a search engine’s primary job: to get you to a website.

For navigational queries, AI search engines are universally worse than Google. When you do a navigational Google search, it’s exceedingly rare that the first result isn’t the one you’re looking for — sure, it’s odd to show you all those results when what Google should actually do is just take you directly to amazon.com or whatever, but it’s fast and it’s rarely wrong. The AI bots, on the other hand, like to think for a few seconds and then provide a bunch of quasi-useful information about the company when all I want is a link. Some didn’t even link to amazon.com.

I don’t hate the additional information so much as I hate how long these AI tools take to get me what I need. Waiting 10 seconds for three paragraphs of generated text about Home Depot is not the answer; I just want a link to Home Depot. Google wins that race every time.

The next most popular kind of search is the information query: you want to know something specific, about which there is a single right answer. “NFL scores” is a hugely popular information query; “what time is it” is another one; so is “weather.” It doesn’t matter who tells you the score or the time or the temperature, it’s just a thing you need to know.

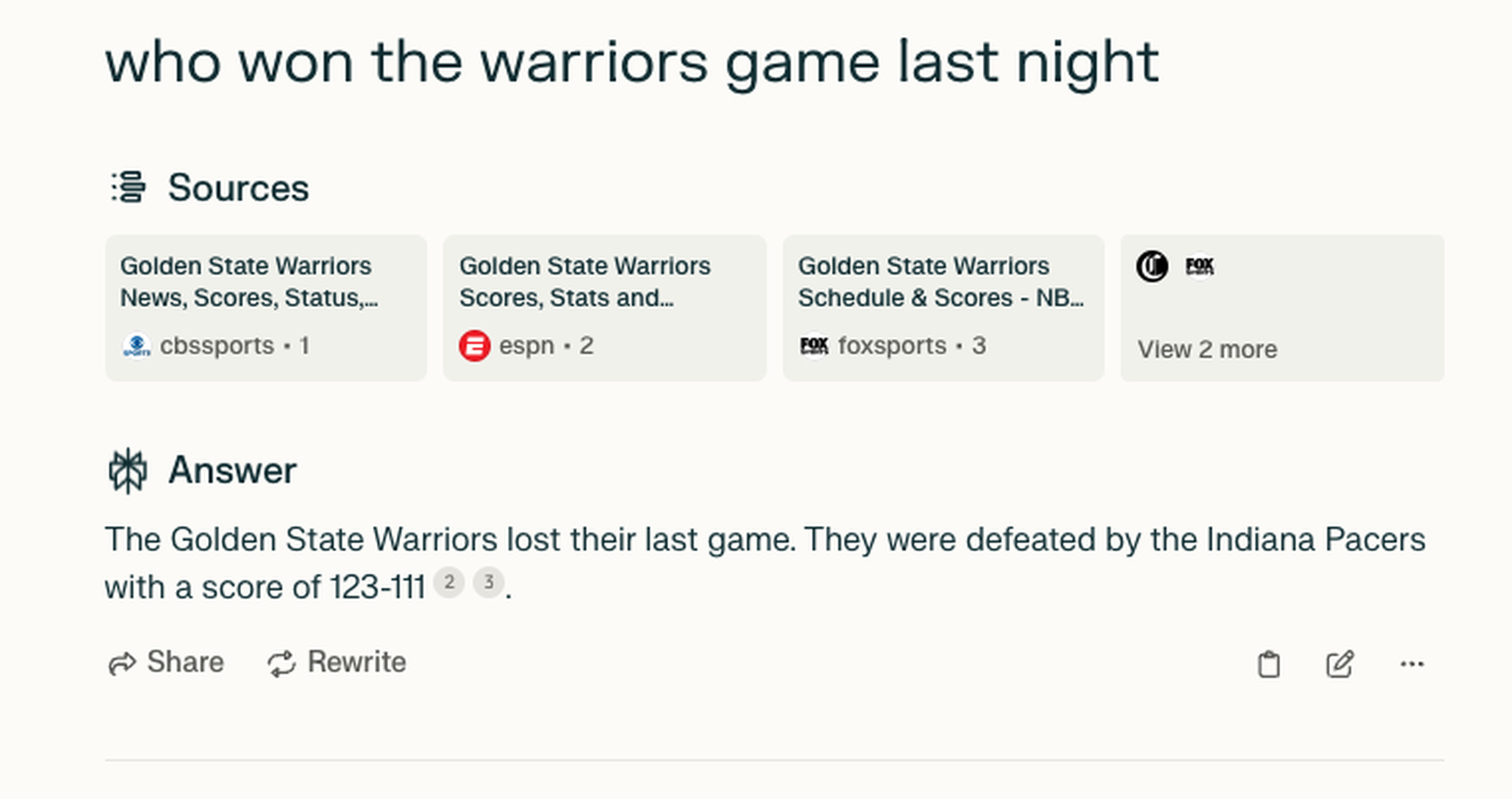

Here, the results are all over the map. For real-time stuff like sports scores, the AI is not to be trusted: You.com and Perplexity both frequently gave me outdated information, though Copilot usually got it right. Google not only gets it right but usually pops up a widget with other stats and information, which is better than the others. Ditto anything requiring your specific location or context — Google probably has that information about you, but the AI bots mostly don’t.

When it comes to more evergreen information like “how many weeks in a year” or “when is mother’s day,” everything I tested got it right. In many cases I actually preferred the AI answers, which add a bit of helpful context. But I’m not sure how often I can trust them. Google told me there are 52.1429 weeks in a year, but You.com explained that actually it’s 52 weeks and a day, plus an added day on leap years. That’s more useful than just 52.1429! But then Perplexity told me that actually, a common year is 52 weeks, and a leap year is 52 weeks and a day — before directly contradicting itself two sentences later. Here’s the whole answer; just try to make sense of it:

> A common year has approximately 52 weeks, while a leap year has 52 weeks and 1 day. In more precise terms, a regular year actually consists of 52.143 weeks, which means there is one additional day in a normal year. On the other hand, a leap year, occurring every four years except for certain exceptions, has 52 weeks and 2 days. This difference in the number of weeks is due to the extra day in a common year and the additional day in February during a leap year.

After doing some more research, I am now confident that the answer is what You.com said. But this all took too long, and forcing me to fact-check my searches kind of defeats the purpose of helpfully summarizing things for me. Google continues to win here on one thing and one thing alone: speed.
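For what it’s worth, the arithmetic is easy to check yourself. Here is a minimal Python sketch, assuming the usual 365-day common year and 366-day leap year; the helper name `weeks_and_days` is made up for illustration and doesn’t come from any of the tools I tested.

```python
def weeks_and_days(days_in_year: int) -> tuple[int, int]:
    """Split a day count into whole weeks and leftover days."""
    return divmod(days_in_year, 7)


# Assumed year lengths: 365 days (common year) and 366 days (leap year).
for label, days in (("common year", 365), ("leap year", 366)):
    weeks, extra = weeks_and_days(days)
    print(f"{label}: {weeks} weeks and {extra} day(s), or {days / 7:.4f} weeks")
```

Running it should show 52 weeks and 1 day for a common year (the 52.1429 figure Google reported) and 52 weeks and 2 days for a leap year, which lines up with You.com’s answer and with the second half of Perplexity’s.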

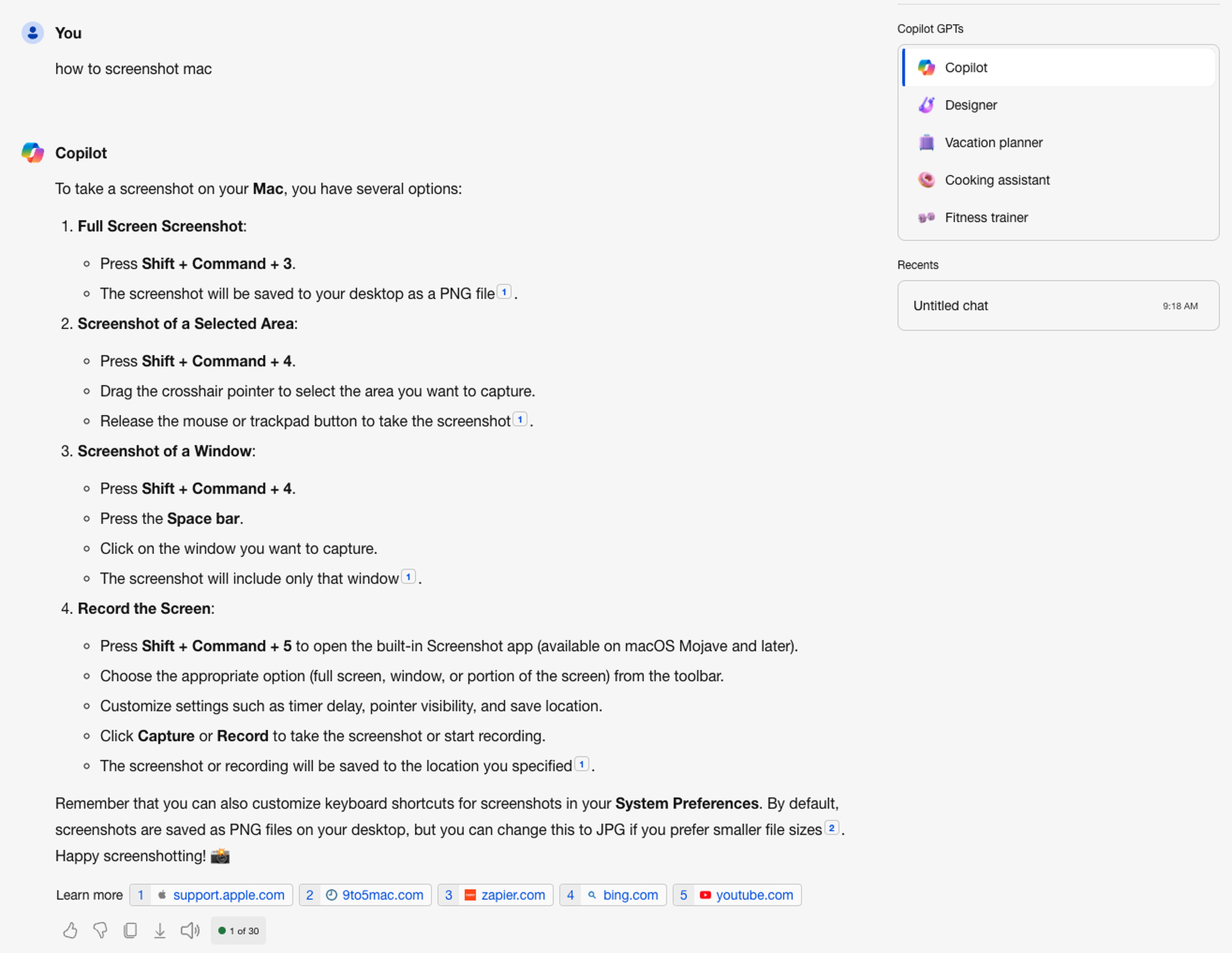

There is one sub-genre of information queries in which the exact opposite is true, though. I call them Buried Information Queries. The best example I can offer is the very popular query, “how to screenshot on mac.” There are a million pages on the internet that contain the answer — it’s just Cmd-Shift-3 to take the whole screen or Cmd-Shift-4 to capture a selection, there, you’re welcome — but that information is usually buried under a lot of ads and SEO crap. All the AI tools I tried, including Google’s own Search Generative Experience, just snatch that information out and give it to you directly. This is great!

Are there complicated questions inherent in that, which threaten the business model and structure of the web? Yep! But as a pure searching experience, it’s vastly better. I’ve had similar results asking about ingredient substitutions, coffee ratios, headphone waterproofing ratings, and any other information that is easy to know and yet often too hard to find.

This brings me to the third kind of Google search: the exploration query. These are questions that don’t have a single answer, that are instead the beginning of a learning process. On the most popular list, things like “how to tie a tie,” “why were chainsaws invented,” and “what is tiktok” count as exploration queries. If you’ve ever Googled the name of a musician you just heard about, or looked up things like “stuff to do in Helena Montana” or “NASA history,” you’re exploring. These are not, according to the rankings, the primary things people use Google for. But these are the moments AI search engines can shine.

Like, wait: why were chainsaws invented? Copilot gave me a multipart answer about their medical origins, before describing their technological evolution and eventual adoption by lumberjacks. It also gave me eight pretty useful links to read more. Perplexity gave me a much shorter answer, but also included a few cool images of old chainsaws and a link to a YouTube explainer on the subject. Google’s results included a lot of the same links, but did none of the synthesizing for me. Even its generative search only gave me the very basics.

My favorite thing about the AI engines is the citations. Perplexity, You.com, and others are slowly getting better at linking to their sources, often inline, which means that if I come across a particular fact that piques my interest, I can go straight to the source from there. They don’t always offer enough sources, or put them in the right places, but this is a good and helpful trend.

One experience I had while doing these tests was the most eye-opening of all. The single most-searched question on Google is a simple one: “what to watch.” Google has a whole specific page design for this, with rows of posters featuring “Top picks” like Dune: Part Two and Imaginary; “For you,” which for me included Deadpool and Halt and Catch Fire; and then popular titles and genre-sorted options. None of the AI search engines did as well: Copilot listed five popular movies; Perplexity offered a random-seeming smattering of options from Girls5eva to Manhunt to Shogun; You.com gave me a bunch of out-of-date information and recommended I watch “the 14 best Netflix original movies” without telling me what they are.

In this case, AI is the right idea — I don’t want a bunch of links, I want an answer to my question — but a chatbot is the wrong interface. For that matter, so is a page of search results! Google, obviously aware that this is the most-asked question on the platform, has been able to design something that works much better.

In a way, that’s a perfect summary of the state of things. At least for some web searches, generative AI could be a better tool than the search tech of decades past. But modern search engines aren’t just pages of links. They’re more like miniature operating systems. They can answer questions directly, they have calculators and converters and flight pickers and all kinds of other tools built right in, they can get you where you’re going with just a click or two. The goal of most search queries, according to these charts, is not to start a journey of information wonder and discovery. The goal is to get a link or an answer, and then get out. Right now, these LLM-based systems are just too slow to compete.

The big question, I think, is less about tech and more about product. Everyone, including Google, believes that AI can help search engines understand questions and process information better. That’s a given in the industry at this point. But can Google reinvent its results pages, its business model, and the way it presents and summarizes and surfaces information, faster than the AI companies can turn their chatbots into more complex, more multifaceted tools? Ten blue links isn’t the answer for search, but neither is an all-purpose text box. Search is everything, and everything is search. It’s going to take a lot more than a chatbot to kill Google.