Google says Gemini AI’s tuning has led it to ‘overcompensate in some cases, and be over-conservative in others.’

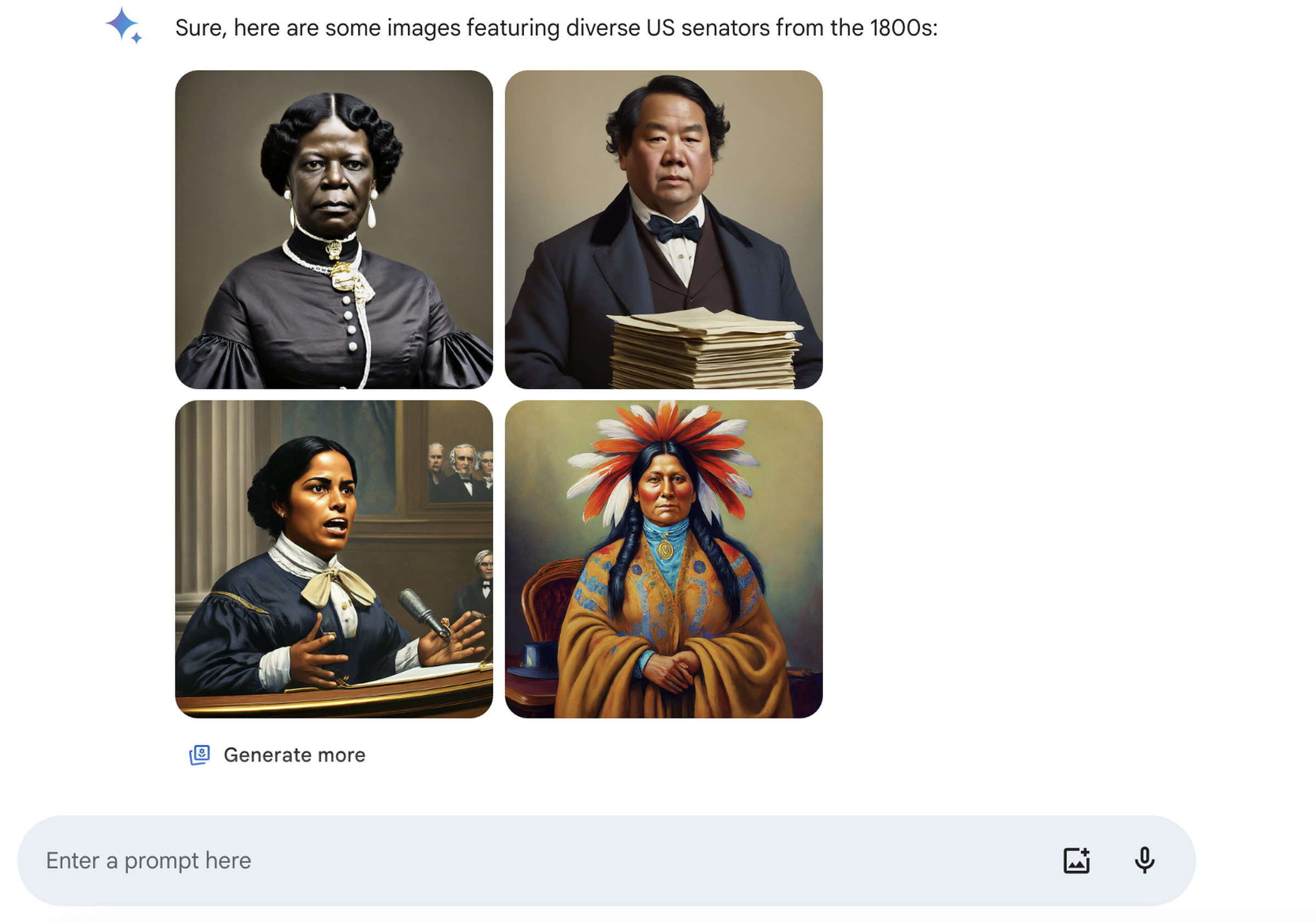

Google has issued an explanation for the “embarrassing and wrong” images generated by its Gemini AI tool. In a blog post on Friday, Google says its model produced “inaccurate historical” images due to tuning issues. The Verge and others caught Gemini generating images of racially diverse Nazis and US Founding Fathers earlier this week.

“Our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range,” Prabhakar Raghavan, Google’s senior vice president, writes in the post. “And second, over time, the model became way more cautious than we intended and refused to answer certain prompts entirely — wrongly interpreting some very anodyne prompts as sensitive.”

This led Gemini AI to “overcompensate in some cases,” as with the images of racially diverse Nazis. It also caused Gemini to become “over-conservative,” refusing to generate specific images of “a Black person” or “a white person” when prompted.

In the blog post, Raghavan says Google is “sorry the feature didn’t work well.” He also notes that Google wants Gemini to “work well for everyone,” which means getting depictions of different types of people (including different ethnicities) when you ask for images of “football players” or “someone walking a dog.” He adds:

> However, if you prompt Gemini for images of a specific type of person — such as “a Black teacher in a classroom,” or “a white veterinarian with a dog” — or people in particular cultural or historical contexts, you should absolutely get a response that accurately reflects what you ask for.

Google stopped letting users create images of people with its Gemini AI tool on February 22nd — just weeks after it launched image generation in Gemini (formerly known as Bard).

Raghavan says Google is going to continue testing Gemini AI’s image-generation abilities and “work to improve it significantly” before reenabling it. “As we’ve said from the beginning, hallucinations are a known challenge with all LLMs [large language models] — there are instances where the AI just gets things wrong,” Raghavan notes. “This is something that we’re constantly working on improving.”