Facebook will send notifications directly to users who like, share, or comment on COVID-19 posts that violate the company’s terms of service, according to a report from Fast Company.

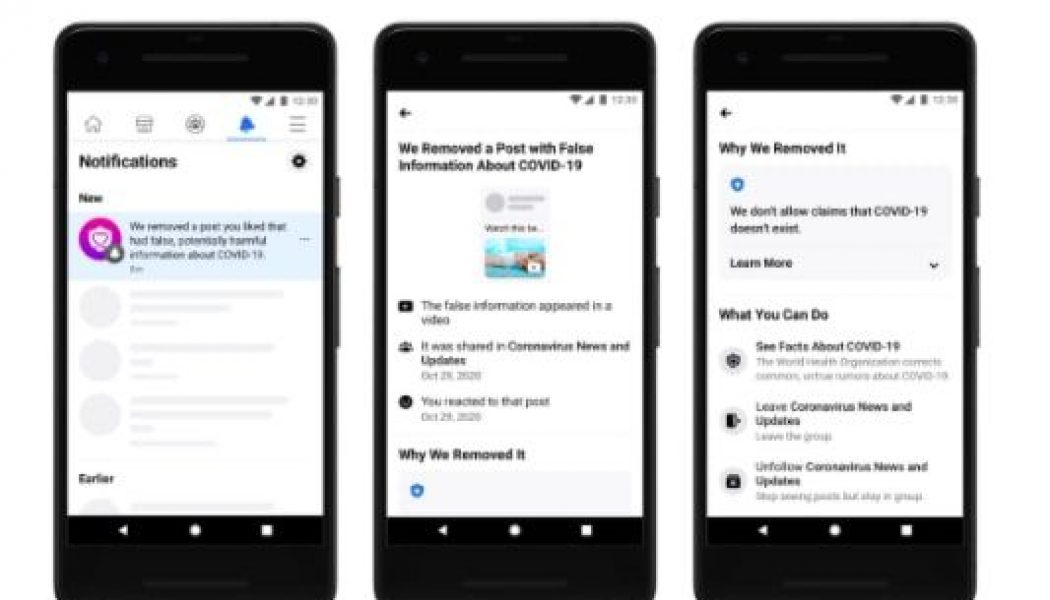

The new feature works like this: if a user interacts with a post that’s later removed, Facebook sends the user a notification saying that the post was taken down. Clicking the notification takes the user to a landing page with a screenshot of the post and a short explanation of why it was removed. The landing page also features links to COVID-19 educational resources and suggested actions, such as unfollowing the group that posted it.

This is an expansion of Facebook’s previous attempts to combat misinformation. Before this, the company displayed a banner in the News Feed urging users who had engaged with since-removed content to “Help Friends and Family Avoid False Information About Covid-19.” But users were often confused about what the banner was referring to, a Facebook product manager told Fast Company. The hope is that the new approach is more direct than the banner, while still avoiding scolding users or re-exposing them to misinformation.

Facebook’s modified approach arrives almost a year into the pandemic, which is a little late. The notifications don’t debunk the claims in removed posts. They also don’t apply to posts that are later given fact-checking labels rather than removed, Fast Company writes. That means misinformation Facebook considers less dangerous still has the opportunity to spread.

Facebook has been slow to act on misinformation that the company doesn’t consider dangerous. Though conspiracy theories about COVID-19 vaccines have spread for months, Facebook only began removing COVID-19 vaccine misinformation in December. The question now is whether this is too little, too late.