Cloning your voice using artificial intelligence is simultaneously tedious and simple: hallmarks of a technology that’s just about mature and ready to go public.

All you need to do is talk into a microphone for 30 minutes or so, reading a script as carefully as you can (in my case: the voiceover from a David Attenborough documentary). After starting and stopping dozens of times to re-record your flubs and mumbles, you’ll send off the resulting audio files to be processed and, in a few hours’ time, be told that a copy of your voice is ready and waiting. Then, you can type anything you want into a chatbox, and your AI clone will say it back to you, with the resulting audio realistic enough to fool even friends and family, at least for a few moments. The fact that such a service even exists may be news to many, and I don’t believe we’ve begun to fully consider the impact easy access to this technology will have.

Speech synthesis has improved massively in recent years, thanks to advances in machine learning. Previously, the most realistic synthetic voices were created by recording audio of a human voice actor, cutting up their speech into component sounds, and splicing these back together like letters in a ransom note to form new words. Now, neural networks can be trained on unsorted data of their target voice to generate raw audio of someone speaking from scratch. The process is faster and easier, and the results are more realistic to boot. The clones are definitely not perfect straight out of the machine (though manual tweaking can improve them), but they’re only going to get better in the near future.

There’s no special sauce to making these clones, which means dozens of startups are already offering similar services. Just Google “AI voice synthesis” or “AI voice deepfakes,” and you’ll see how commonplace the technology is, available from specialist shops that only focus on speech synthesis, like Resemble.AI and Respeecher, and also integrated into companies with larger platforms, like Veritone (where the tech is part of its advertising repertoire) and Descript (which uses it in the software it makes for editing podcasts).

Until recently, these voice clones were simply a novelty, appearing as one-offs like a fake of Joe Rogan, but they’re beginning to be used in serious projects. In July, a documentary about chef Anthony Bourdain stirred controversy when the creators revealed they’d used AI to create audio of Bourdain “speaking” lines he’d written in a letter. (Notably, few people noticed the deepfake until the creators revealed its existence.) And in August, the startup Sonantic announced it had created an AI voice clone of actor Val Kilmer, whose own voice was damaged in 2014 after he underwent a tracheotomy as part of his treatment for throat cancer. These examples also frame some of the social and ethical dimensions of this technology. The Bourdain use case was decried as exploitative by many (particularly as its use was not disclosed in the film), while the Kilmer work has been generally lauded, with the technology praised for delivering what other solutions could not.

Celebrity applications of voice clones are likely to be the most prominent in the next few years, with companies hoping the famous will want to boost their income with minimal effort by cloning and renting out their voices. One company, Veritone, launched just such a service earlier this year, saying it would let influencers, athletes, and actors license their AI voice for things like endorsements and radio idents, without ever having to go into a studio. “We’re really excited about what that means for a host of different industries because the hardest part about someone’s voice and being able to use it and being able to expand upon that is the individual’s time,” Sean King, executive vice president at Veritone One, told The Vergecast. “A person becomes the limiting factor in what we’re doing.”

Such applications are not yet widespread (or if they are, they’re not widely talked about), but it seems like an obvious way for celebrities to make money. Bruce Willis, for example, has already licensed his image to be used as a visual deepfake in mobile phone ads in Russia. The deal allows him to make money without ever leaving the house, while the advertising company gets an infinitely malleable actor (and, notably, a much younger version of Willis, straight out of his Die Hard days). These sorts of visual and audio clones could bring economies of scale to celebrity work, allowing celebrities to capitalize on their fame, as long as they’re happy renting out a simulacrum of themselves.

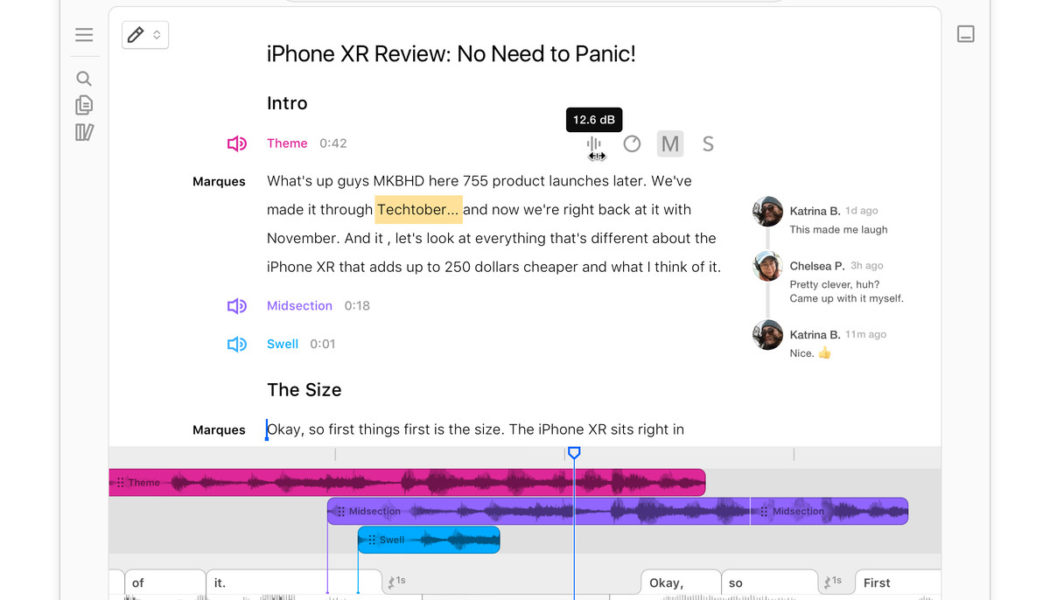

In the here and now, voice synthesis technology is already being built into tools like the eponymous podcast editing software built by US firm Descript. The company’s “Overdub” feature lets a podcaster create an AI clone of their voice so producers can make quick changes to their audio, supplementing the program’s transcription-based editing. As Descript CEO Andrew Mason told The Vergecast: “You can not only delete words in Descript and have it delete the audio, you can type words and it will generate audio in your voice.”

When I tried Descript’s Overdub feature myself, it was certainly easy enough to use — though, as mentioned above, recording the training data was a bit of a chore. (It was much easier for my colleague and regular Verge podcast host Ashley Carman, who had lots of pre-recorded audio ready to send the AI.) The voice clones made by Overdub are not flawless, certainly. They have an odd warble to their tone and lack the ability to really charge lines with emotion and emphasis, but they’re also unmistakably you. The first time I used my voice clone was a genuinely uncanny moment. I had no idea that this deeply personal thing — my voice — could be copied by technology so quickly and easily. It felt like a meeting with the future but was also strangely familiar. After all, life is already full of digital mirrors — of avatars and social media feeds that are supposed to embody “you” in various forms — so why not add a speaking automaton to the mix?

The initial shock of hearing a voice clone of yourself doesn’t mean human voices are redundant, though. Far from it. You can certainly improve on the quality of voice deepfakes with a little manual editing, but in their automated form, they still can’t deliver anywhere near the range of inflection and intonation you get from professionals. As voice artist and narrator Andia Winslow told The Vergecast, while AI voices might be useful for rote voice work — for internal messaging systems, automated public announcements, and the like — they can’t compete with humans in many use cases. “For big stuff, things that need breath and life, it’s not going to go that way because, partly, these brands like working with the celebrities they hire, for example,” said Winslow.

But what does this technology mean for the general public? For those of us who aren’t famous enough to benefit from the technology and are not professionally threatened by its development? Well, the potential applications are varied. It’s not hard to imagine a video game where the character creation screen includes an option to create a voice clone, so it sounds like the player is speaking all of the dialogue in the game. Or there might be an app for parents that allows them to copy their voice so that they can read bedtime stories to their children even when they’re not around. Such applications could be done with today’s technology, though the middling quality of quick clones would make them a hard sell.

There are also potential dangers. Fraudsters have already used voice clones to trick companies into moving money into their accounts, and other malicious uses are certainly lurking just beyond the horizon. Imagine, for example, a high school student surreptitiously recording a classmate to create a voice clone of them, then faking audio of that person bad-mouthing a teacher to get them in trouble. If the uses of visual deepfakes are anything to go by, where worries about political misinformation have proven largely misplaced but the technology has done huge damage creating nonconsensual pornography, it’s these sorts of incidents that pose the biggest threats.

One thing’s for sure, though: in the future, anyone will be able to create an AI voice clone of themselves if they want to. But the script this chorus of digital voices will follow has yet to be written.