DeepMind has created an AI system named AlphaCode that it says “writes computer programs at a competitive level.” The Alphabet subsidiary tested its system against coding challenges used in human competitions and found that its program achieved an “estimated rank” placing it within the top 54 percent of human coders. The result is a significant step forward for autonomous coding, says DeepMind, though AlphaCode’s skills are not necessarily representative of the sort of programming tasks faced by the average coder.

Oriol Vinyals, principal research scientist at DeepMind, told The Verge over email that the research was still in the early stages but that the results brought the company closer to creating a flexible problem-solving AI — a program that can autonomously tackle coding challenges that are currently the domain of humans only. “In the longer-term, we’re excited by [AlphaCode’s] potential for helping programmers and non-programmers write code, improving productivity or creating new ways of making software,” said Vinyals.

AlphaCode was tested against challenges curated by Codeforces, a competitive coding platform that shares weekly problems and issues rankings for coders similar to the Elo rating system used in chess. These challenges are different from the sort of tasks a coder might face while making, say, a commercial app. They’re more self-contained and require a wider knowledge of both algorithms and theoretical concepts in computer science. Think of them as very specialized puzzles that combine logic, maths, and coding expertise.

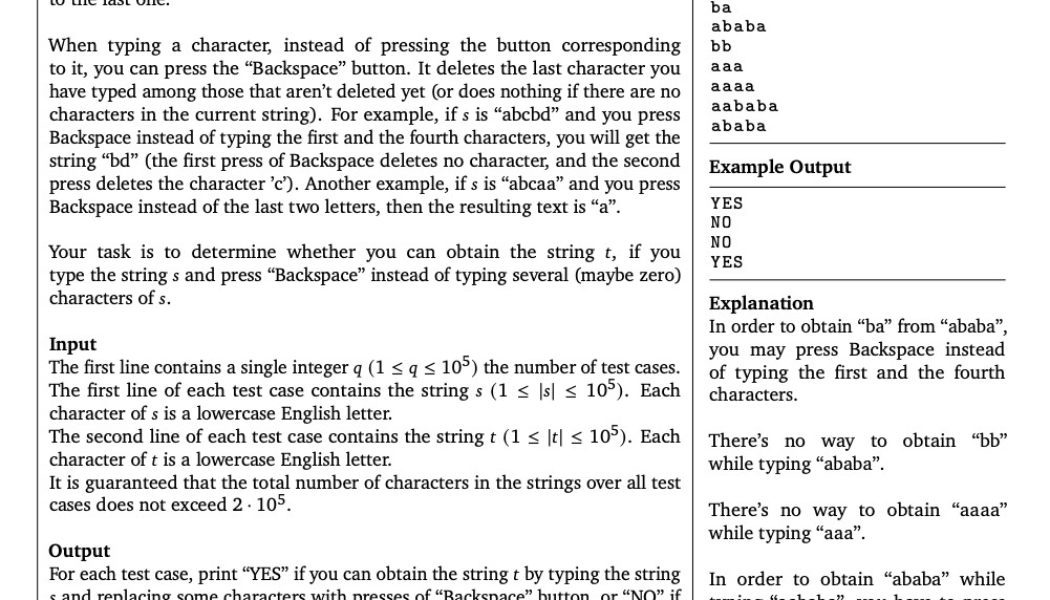

In one example challenge that AlphaCode was tested on, competitors are asked to find a way to convert one string of random, repeated s and t letters into another string of the same letters using a limited set of inputs. Competitors cannot, for example, just type new letters but instead have to use a “backspace” command that deletes several letters in the original string. You can read a full description of the challenge below:

:no_upscale()/cdn.vox-cdn.com/uploads/chorus_asset/file/23212584/Screenshot_2022_02_02_at_14.30.00.png)

Ten of these challenges were fed into AlphaCode in exactly the same format they’re given to humans. AlphaCode then generated a larger number of possible answers and winnowed these down by running the code and checking the output just as a human competitor might. “The whole process is automatic, without human selection of the best samples,” Yujia Li and David Choi, co-leads of the AlphaCode paper, told The Verge over email.

AlphaCode was tested on 10 of challenges that had been tackled by 5,000 users on the Codeforces site. On average, it ranked within the top 54.3 percent of responses, and DeepMind estimates that this gives the system a Codeforces Elo of 1238, which places it within the top 28 percent of users who have competed on the site in the last six months.

“I can safely say the results of AlphaCode exceeded my expectations,” Codeforces founder Mike Mirzayanov said in a statement shared by DeepMind. “I was sceptical [sic] because even in simple competitive problems it is often required not only to implement the algorithm, but also (and this is the most difficult part) to invent it. AlphaCode managed to perform at the level of a promising new competitor.”

:no_upscale()/cdn.vox-cdn.com/uploads/chorus_asset/file/23212711/Fig_04_Final.jpg)

DeepMind notes that AlphaCode’s current skill set is only currently applicable within the domain of competitive programming but that its abilities open the door to creating future tools that make programming more accessible and one day fully automated.

Many other companies are working on similar applications. For example, Microsoft and the AI lab OpenAI have adapted the latter’s language-generating program GPT-3 to function as an autocomplete program that finishes strings of code. (Like GPT-3, AlphaCode is also based on an AI architecture known as a transformer, which is particularly adept at parsing sequential text, both natural language and code). For the end user, these systems work just like Gmails’ Smart Compose feature — suggesting ways to finish whatever you’re writing.

A lot of progress has been made developing AI coding systems in recent years, but these systems are far from ready to just take over the work of human programmers. The code they produce is often buggy, and because the systems are usually trained on libraries of public code, they sometimes reproduce material that is copyrighted.

In one study of an AI programming tool named Copilot developed by code repository GitHub, researchers found that around 40 percent of its output contained security vulnerabilities. Security analysts have even suggested that bad actors could intentionally write and share code with hidden backdoors online, which then might be used to train AI programs that would insert these errors into future programs.

Challenges like these mean that AI coding systems will likely be integrated slowly into the work of programmers — starting as assistants whose suggestions are treated with suspicion before they are trusted to carry out work on their own. In other words: they have an apprenticeship to carry out. But so far, these programs are learning fast.