Apple has previewed a lineup of new software features designed to improve cognitive, vision, hearing and mobility accessibility, along with tools for users who are non-speaking or have limited speech capacity. In announcing the features, the company said it developed the tools in collaboration with community groups to meet needs across the disability spectrum.

“At Apple, we’ve always believed that the best technology is technology built for everyone,” CEO Tim Cook said. “Today, we’re excited to share incredible new features that build on our long history of making technology accessible, so that everyone has the opportunity to create, communicate, and do what they love.”

Among the features is Live Speech, which lets users type what they want to say and have it spoken aloud during phone and FaceTime calls. Users can also save frequently used phrases so they are on hand during calls.

Building on Live Speech is Personal Voice, a synthesized voice geared toward people who may be losing their ability to speak or who are unable to speak for extended periods of time, such as those with an ALS diagnosis.

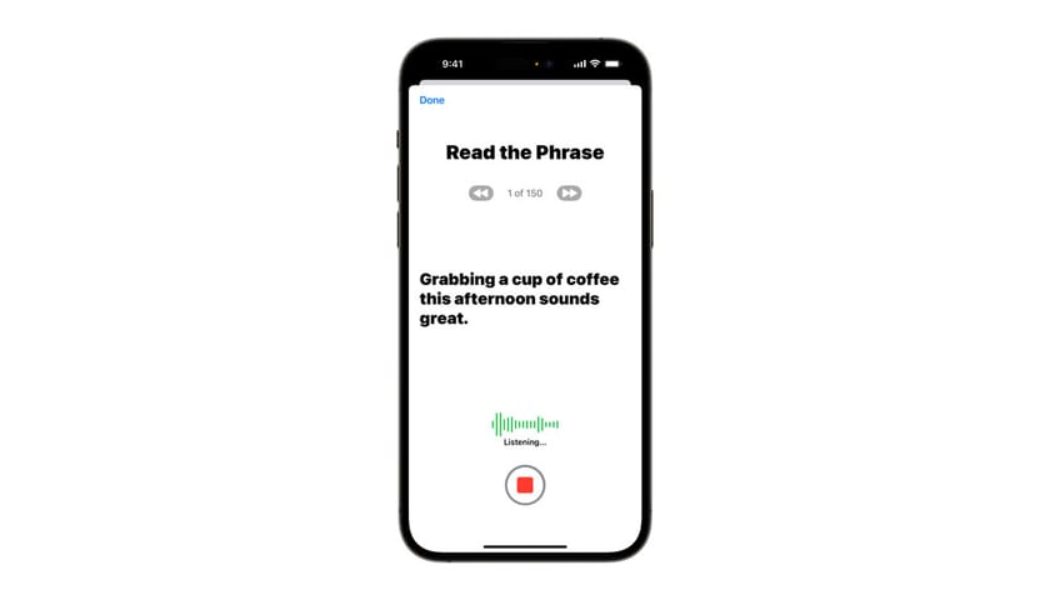

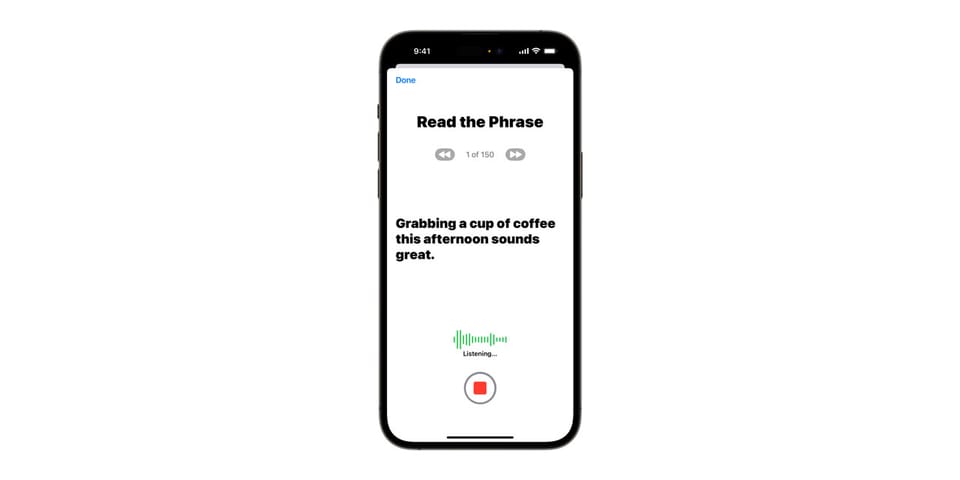

To set up Personal Voice, users read a randomized set of text prompts aloud, which takes about 15 minutes. A synthetic version of their voice is then created using AI, and Live Speech can speak typed messages aloud in the user's own voice.

While some users may be wary of how a recording of their voice could be used, Apple stresses that voices are stored on-device and that their information is kept private and secure.
