The past week has seen the US government thrown into crisis after an unprecedented attack on the Capitol by a pro-Trump mob — all part of a messy attempt to overturn the election, egged on by President Donald Trump himself. Yesterday, the House of Representatives introduced an article of impeachment against Trump for incitement of insurrection. This is a turning point for the American experiment.

Part of that turning point is the role of internet platform companies.

My guest today is Professor Daphne Keller, director of the Program on Platform Regulation at Stanford’s Cyber Policy Center, and we’re talking about a big problem: how to moderate what happens on the internet.

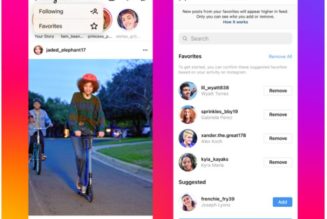

In the aftermath of the attack on the Capitol, both Twitter and Facebook banned Trump, as did a host of smaller platforms. Other platforms like Stripe stopped processing donations to his website. Reddit banned the Donald Trump subreddit — the list goes on. And a smaller competitor to Twitter was effectively pushed off the internet, as Apple and Google removed it from their app stores, and Amazon kicked it off Amazon Web Services.

All of these actions were taken under dire circumstances: an attempted coup from a sitting president that left six people dead. But they are all part of a larger debate about content moderation across the internet that’s been heating up for over a year now, a debate that is extremely complicated and much more sophisticated than any of the people yelling about free speech and the First Amendment really give it credit for.

Professor Keller has been on many sides of the content moderation system: before coming to Stanford, she was an associate general counsel at Google, where she was responsible for takedown requests relating to search results. She’s also published work on the messy interaction between the law and the terms of service agreements when it comes to free expression online. I really wanted her help understanding the frameworks content moderation decisions get made in, what limits these companies, and what other models we might use.

Two notes: you’ll hear Professor Keller talk about “CDA 230” a lot — that’s a reference to Section 230, the law that says platforms aren’t liable for what their users publish. And pay attention to how quickly the conversation turns to competition — the size and scale of the big platform companies are key parts of the moderation debate in ways that surprised even me. Okay, Daphne Keller, director of the Program on Platform Regulation at Stanford.

Here we go.

Below is a lightly edited excerpt from our conversation.

2020 was a big inflection point in the conversation about content moderation. There was an endless amount of debate about Section 230, which is the law that says platforms aren’t liable for what their users post. Trump insisted that it be repealed, which is a bad idea for a variety of reasons. Interestingly to me, Joe Biden’s platform position is also that 230 be repealed, which is unique among Democrats.

That conversation really heated up over the last week: Trump incited a riot at the Capitol and got himself banned from Twitter and Facebook, among other platforms. For example, Stripe, a platform we don’t think about in the context of Twitter or Facebook, stopped processing payments from the Trump campaign website.

Following that, a competitor to Twitter called Parler, which had very lax content moderation rules, became the center of attention. It got itself removed from both the Apple and Google app stores, and Amazon Web Services pulled the plug on its web hosting — effectively, the service was shut down by large tech companies that control distribution points. That’s a lot of different points in the tech stack. One of the things that I’m curious about is, it feels like we had a year of 230 debate, and now, a bunch of other people are showing up and saying, “What should content moderation be?” But there is actually a pretty sophisticated existing framework and debate in industry and in academia. Can you help me understand what the existing frameworks in the debate look like?

I actually think there’s a big gap between the debate in DC, the debate globally, and the debate among experts in academia. DC has been a circus, with lawmakers just making things up and throwing spaghetti at the wall. There were over 20 legislative proposals to change CDA 230 last year, and a lot of them were just theater. By contrast, globally, and especially in Europe, there’s work on a huge legislative package, the Digital Services Act. There’s a lot of attention where I think it should be placed: on the logistics of content moderation. How do you moderate that much speech at once? How do you define rules that can even be imposed on that much speech at once?

The proposals in Europe include things like getting courts involved in deciding what speech is illegal, instead of putting that in the hands of private companies. They include having processes so that when users have their speech taken down, they get notified, and they have an opportunity to respond and say if they think they’ve been falsely accused. And then, if what we’re talking about is the platforms’ own power to take things down, the European proposal and some of the US proposals also involve things like making sure platforms are really as clear as they can be about what their rules are, telling users how the rules have been enforced, and letting users appeal those discretionary takedown decisions. It’s about trying to make it so that users understand what they’re getting, and ideally so that there is also enough competition that they can migrate somewhere else if they don’t like the rules that are being imposed.

The question about competition to me feels like it’s at the heart of a lot of the controversy, without ever being at the forefront. Over the weekend, Apple, Google, AWS, Okta, and Twilio all decided they weren’t going to work with Parler anymore because it didn’t have the necessary content moderation standards. I think Amazon made public a letter they’d sent to Parler saying, “We’ve identified 98 instances where you should have moderated this harder, and you’re out of our terms of service. We’re not going to let incitement of violence happen through AWS.” If all of those companies can effectively take Parler off the internet, how can you have a rival company to Twitter with a different content moderation standard? Because it feels like if you want to start a service that has more lax moderation, you will run into AWS saying, “Well, here’s the floor.”

This is why if you go deep enough in the internet’s technical stack, down from consumer-facing services like Facebook or Twitter, to really essential infrastructure, like your ISP, mobile carrier, or access providers, we have net neutrality rules — or we had net neutrality rules — saying those companies do have to be common carriers. They do have to provide their services to everyone. They can’t become discretionary censors or choose what ideas can flow on the internet.

Obviously, we have a big debate in this country about net neutrality, even at that very bottom layer. But the examples that you just listed show that we need to have the same conversation about anyone who might be seen as essential infrastructure. If Cloudflare, for example, is protecting a service from hacking, and you effectively can’t be on the internet anymore once Cloudflare boots you off, then we should talk about what the rules should be for Cloudflare. And in that case, their CEO, Matthew Prince, wrote a great op-ed saying, “I shouldn’t have this power. We should be a democracy, and decide how this happens, and it shouldn’t be that random tech CEOs become the arbiters of what speech can flow on the internet.”

So we are talking about many different places in the stack, and I’ve always been a proponent of net neutrality at the ISP level, where it is very hard for most people to switch. There are a lot of pricing games, and there’s not a lot of competition. It makes a lot of sense for neutrality to exist there. At the user-facing platform level, the very top of the stack, take Twitter: I don’t know that Twitter neutrality makes any sense. Google is another great example. There’s an idea that search neutrality is a conceptual thing that you can introduce to Google. What is the spectrum of neutrality for a pipe? I’m not sure if search neutrality is even possible. It sounds great to say. I like saying it. Where do you think the gradations of that spectrum lie?

For a service like Twitter or Facebook, if they were neutral, if they allowed every single thing to be posted, or even every single legal thing the First Amendment allows, they would be cesspools. They would be free speech mosh pits. And like real-world mosh pits, there are some white guys who would like it, and everybody else would flee. That’s a problem, both because they would become far less useful as sites for civil discourse and because the advertisers would go away, the platforms would stop making money, and the audience would leave. They would be effectively useless if they had to carry everything. I think most people, realistically, do want them to kick out the Nazis. They do want them to weed out bullying, porn, pro-anorexia content, and just the tide of garbage that would otherwise inundate the internet.

In the US, it’s conservatives who have been raising this question, but globally, people all across the political spectrum raise it. The question is: are the big platforms such de facto gatekeepers in controlling discourse and access to an audience that they ought to be subject to some other kind of rules? You hinted at it earlier. That’s kind of a competition question. There’s a nexus of competition and speech questions that we are not wrangling with well yet.