YouTube will give music labels a way to take down content that ‘mimics an artist’s unique singing or rapping voice.’ Creators will also be required to label AI-generated content beginning next year.

YouTube will have two sets of content guidelines for AI-generated deepfakes: a very strict set of rules to protect the platform’s music industry partners, and another, looser set for everyone else.

That’s the explicit distinction laid out today in a company blog post, which goes through the platform’s early thinking about moderating AI-generated content. The basics are fairly simple: YouTube will require creators to begin labeling “realistic” AI-generated content when they’re uploading videos, and it says the disclosure requirement is especially important for topics like elections or ongoing conflicts.

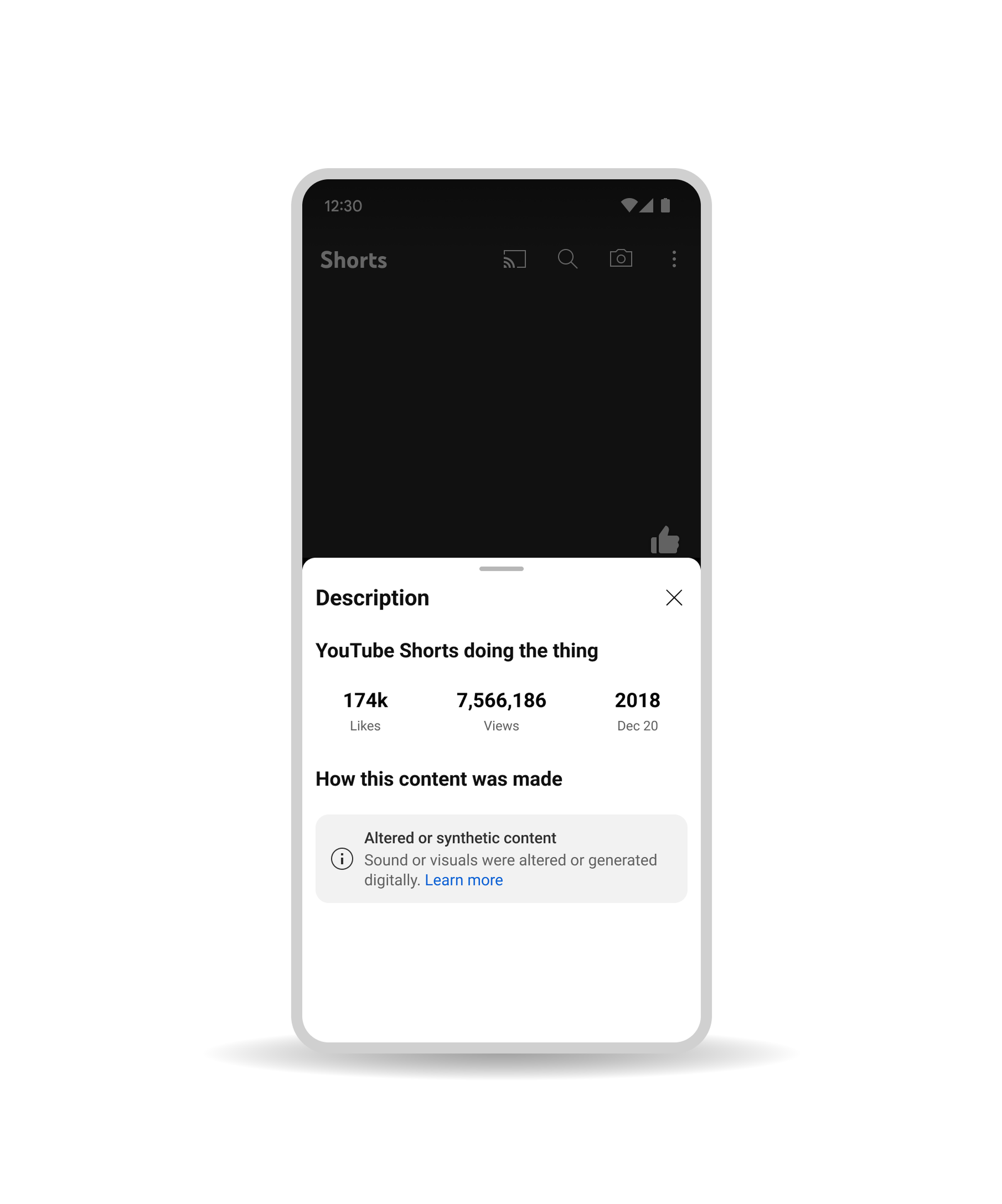

The labels will appear in video descriptions, and on top of the videos themselves for sensitive material. There is no specific definition of what YouTube thinks “realistic” means yet; YouTube spokesperson Jack Malon tells us that the company will provide more detailed guidance with examples when the disclosure requirement rolls out next year.

YouTube says the penalties for not labeling AI-generated content accurately will vary, but could include takedowns and demonetization. But it’s not clear how YouTube will know if an unlabeled video was actually generated by AI. YouTube’s Malon says the platform is “investing in the tools to help us detect and accurately determine if creators have fulfilled their disclosure requirements when it comes to synthetic or altered content,” but those tools don’t exist yet, and the AI detection tools that do exist have notoriously poor track records.

From there, it gets more complicated — vastly more complicated. YouTube will allow people to request removal of videos that “simulate an identifiable individual, including their face or voice” using the existing privacy request form. So if you get deepfaked, there’s a process to follow that may result in that video coming down — but the company says it will “evaluate a variety of factors when evaluating these requests,” including whether the content is parody or satire and whether the individual is a public official or “well-known individual.”

If that sounds vaguely familiar, it’s because those are the same sorts of analyses courts do: parody and satire are important elements of the fair use defense in copyright infringement cases, and assessing whether someone is a public figure is an important part of defamation law. But since there’s no specific federal law regulating AI deepfakes, YouTube is making up its own rules to get ahead of the curve. The platform will be able to enforce those rules any way it wants, with no particular transparency or consistency required, and they will sit right alongside the normal creator dramas around fair use and copyright law.

It is going to be wildly complicated — there’s no definition of “parody and satire” for deepfake videos yet, but Malon again said there would be guidance and examples when the policy rolls out next year.

Making things even more complex, there will be no exceptions for things like parody and satire when it comes to AI-generated music content from YouTube’s partners “that mimics an artist’s unique singing or rapping voice,” meaning Frank Sinatra singing The Killers’ Mr. Brightside is likely in for an uphill battle if Universal Music Group decides it doesn’t like it.

There are entire channels dedicated to churning out AI covers by artists living and dead, and under YouTube’s new rules, most would be subject to takedowns by the labels. The only exception YouTube offers in its blog post is if the content is “the subject of news reporting, analysis or critique of the synthetic vocals” — another echo of a standard fair use defense without any specific guidelines yet. YouTube has long been a generally hostile environment for music analysis and critique because of overzealous copyright enforcement, so we’ll have to see if the labels can show any restraint at all — and if YouTube actually pushes back.

This special protection for singing and rapping voices won’t be a part of YouTube’s automated Content ID system when it rolls out next year; Malon tells us that “music removal requests will be made via a form” that partner labels will have to fill out manually. And the platform isn’t going to penalize creators who trip over these blurred lines, at least not in these early days — Malon says “content removed for either a privacy request or a synthetic vocals request will not result in penalties for the uploader.”

YouTube is walking quite a tightrope here, as there is no established legal framework for copyright law in the generative AI era: there’s no specific law or court case that says it’s illegal to train an AI system to sing in Taylor Swift’s voice. But YouTube is also existentially dependent on the music industry. It needs licenses for all the music that floods the platform daily, and especially to compete with TikTok, which has emerged as the most powerful music discovery tool on the internet. There’s a reason YouTube and Universal Music noisily announced a deal to work on AI soon after Ghostwriter977 posted “Heart on my Sleeve” with the AI-generated voices of Drake and The Weeknd: YouTube has to keep these partners happy, even if that means literally taking the law into its own hands.

At the same time, YouTube parent company Google is pushing ahead on scraping the entire internet to power its own AI ambitions, resulting in a company that is at once writing special rules for the music industry and telling everyone else that their work will be taken for free. The tension is only going to keep building, and at some point, someone is going to ask Google why the music industry is so special.