Who would have thought that typing into a chat window, on your computer, would be 2023’s hottest innovation?

It’s pretty obvious that nobody saw ChatGPT coming. Not even OpenAI. Before it became by some measures the fastest-growing consumer app in history, before it turned the phrase “generative pre-trained transformers” into common vernacular, before every company you can think of was racing to adopt its underlying model, ChatGPT launched in November 2022 as a “research preview.”

The blog post announcing ChatGPT is now a hilarious case study in underselling. “ChatGPT is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response. We are excited to introduce ChatGPT to get users’ feedback and learn about its strengths and weaknesses.” That’s it! That’s the whole pitch! No waxing poetic about fundamentally changing the nature of our interactions with technology, not even, like, a line about how cool it is. It was just a research preview.

But now, barely four months later, it looks like ChatGPT really is going to change the way we think about technology. Or, maybe more accurately, change it back. Because the way we’re going, the future of technology is not whiz-bang interfaces or the metaverse. It’s “typing commands into a text box on your computer.” The command line is back — it’s just a whole lot smarter now.

Really, generative AI is headed in two simultaneous directions. The first is much more infrastructural, adding new tools and capabilities to the stuff you already use. Large language models like GPT-4 and Google’s LaMDA are going to help you write emails and memos; they’re going to automatically spruce up your slide decks and correct any mistakes in your spreadsheets; they’re going to edit your photos better than you can; they’re going to help you write code and in many cases just do it for you.

This is roughly the path AI has been on for years, right? Google has been integrating all kinds of AI into its products over the last few years, and even companies like Salesforce have built strong AI research projects. These models are expensive to create, expensive to train, expensive to query, and potentially game-changing for corporate productivity. Adding AI enhancements to products you already use is a big business (or, at least, is being invested in like one) and will be for a long time.

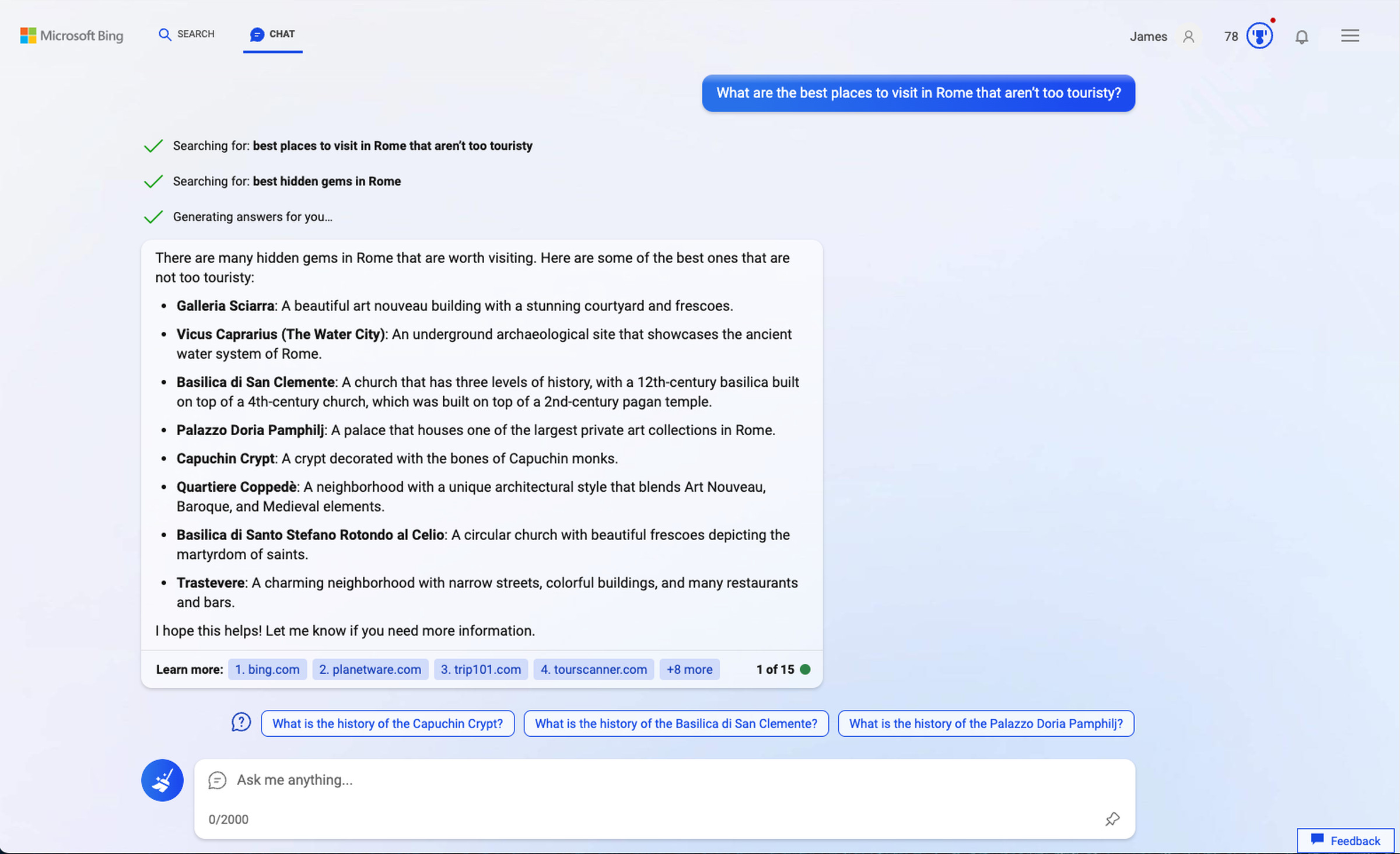

The other AI direction, the one where interacting with the AI becomes a consumer product, was a much less obvious development. It makes sense now, of course: who doesn’t want to talk to a robot that knows all about movies and recipes and what to do in Tokyo, and if you say just the right things might go totally off the rails and try to make out with you? But before ChatGPT took the world by storm, and before Bing and Bard both took the idea and tried to build their own products out of it, I certainly wouldn’t have bet that typing into a chat window would be the next big thing in user interfaces.

In a way, this is a return to a very old idea. For many years, most users only interacted with computers by typing on a blank screen — the command line was how you told the machine what to do. (Yes, ChatGPT is a lot of machines, and they’re not right there on your desk, but you get the idea.)

But then, a funny thing happened: we invented better interfaces! The trouble with the command line was that you needed to know exactly what to type and in which order to get the computer to behave. Pointing and clicking on big icons was much simpler, plus it was much easier to teach people what the computer could do through pictures and icons. The command line gave way to the graphical user interface, and the GUI still reigns supreme.

Developers never stopped trying to make chat UI work, though. WhatsApp is a good example: the company has spent years trying to figure out how users can use chat to interact with businesses. Allo, one of Google’s many failed messaging apps, hoped you might interact with an AI assistant inside chats with your friends. The first round of chatbot hype, circa 2016, had a lot of very smart people thinking that messaging apps were the future of everything.

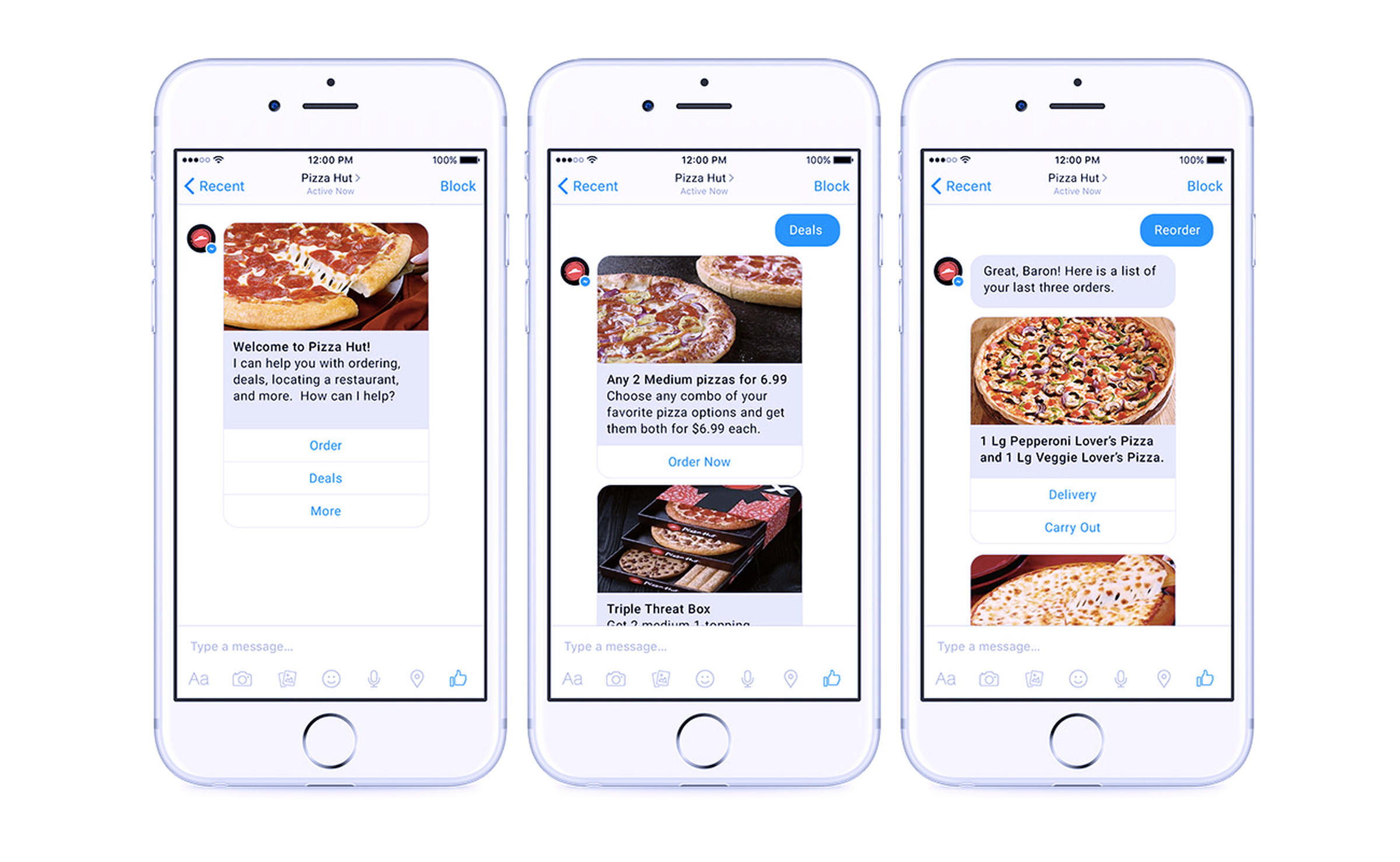

There’s just something alluring about the messaging interface, the “conversational AI.” It starts with the fact that we all know how to use it; messaging apps are how we keep in touch with the people we care about most, which means they’re a place we spend a lot of time and energy. You may not know how to navigate the recesses of the Uber app or how to find your frequent flier number in the Southwest app, but “text these words to this number” is a behavior almost anyone understands. In a market where people don’t want to download apps and mobile websites mostly still suck, messaging can simplify experiences in a big way.

Also, while messaging isn’t the most advanced interface, it might be the most expandable. Take Slack, for instance: you probably think of it as a chat app, but in that back-and-forth interface, you can embed links, editable documents, interactive polls, informational bots, and so much more. WeChat is famously an entire platform — basically an entire internet — smushed into a messaging app. You can start with messaging and go a lot of places.

But so many of these tools stumble in the same ways. For quick exchanges of information, like business hours, chat is perfect: ask a question, get an answer. But browsing a catalog as a series of messages? No thanks. Buying a plane ticket with a thousand-message back-and-forth? Hard pass. It’s no different from voice assistants, and god help you if you’ve ever tried to buy even simple things with Alexa. (“For Charmin, say ‘three.’”) For most complicated things, a visual and dedicated UI is far better than a messaging window.

And when it comes to ChatGPT, Bard, Bing, and the rest, things get complicated really fast. These models are smart and collaborative, but you still have to know exactly what to ask for, in what way, and in what order to get what you want. The idea of a “prompt engineer,” the person you pay to know exactly how to coax the perfect image from Stable Diffusion or get ChatGPT to generate just the right JavaScript, seems ridiculous but is actually an utterly necessary part of the equation. It’s no different than in the early computer era when only a few people knew how to tell the computer what to do. There are already marketplaces on which you can buy and sell really great prompts; there are prompt gurus and books about prompts; I assume Stanford is already working on a Prompt Engineering major that everyone will be taking soon.

The remarkable thing about generative AI is that it feels like it can do almost anything. That’s also the whole problem. When you can do anything, what do you do? Where do you start? How do you learn how to use it when your only window into its possibilities is a blinking cursor? Eventually, these companies might develop more visual, more interactive tools that help people truly understand what they can do and how it all works. (This is one reason to keep an eye on ChatGPT’s new plug-ins system, which is pretty straightforward for now but could quickly expand the things you can do in the chat window.) Right now, the best idea any of them have is to offer a few suggestions about things you might type.

AI was going to be a feature. Now it’s the product. And that means the text box is back. Messaging is the interface, again.