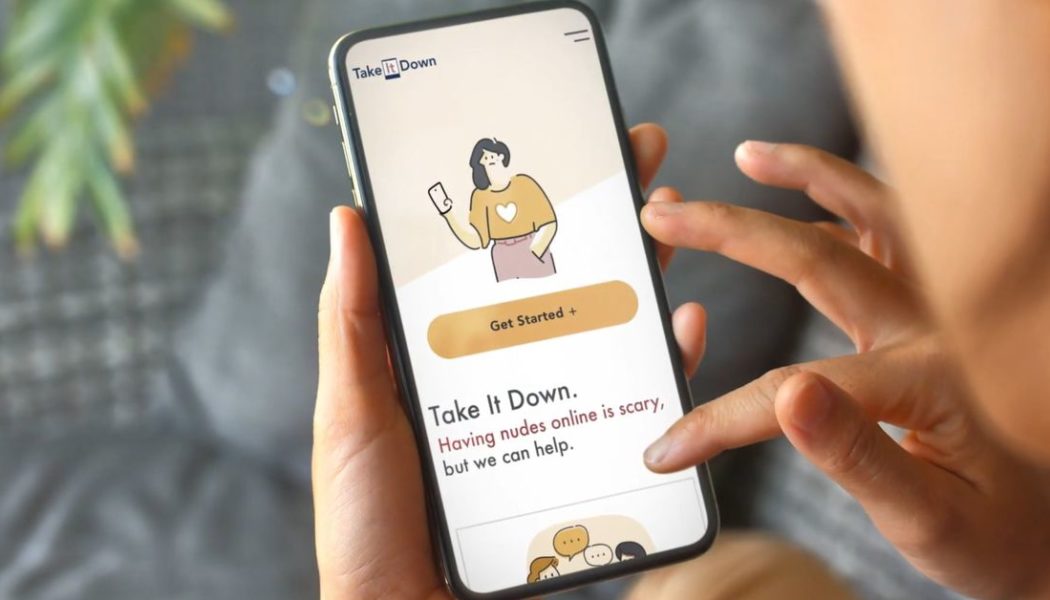

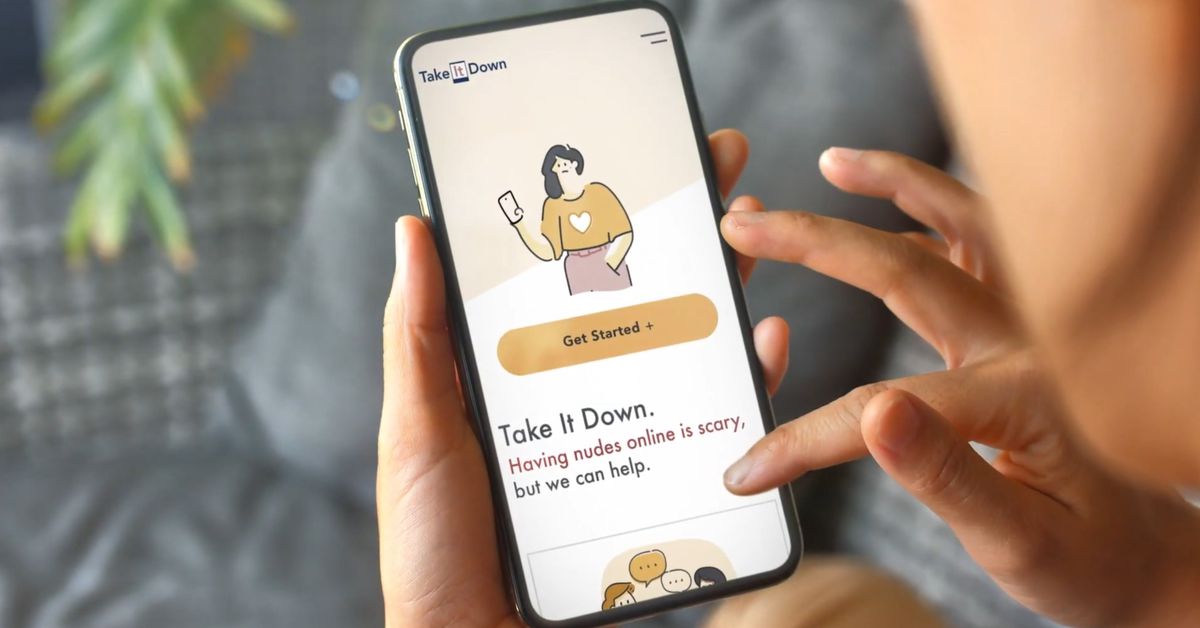

‘Take It Down’ makes it easier for children and young adults to remove exploitative and sexualized content from participating platforms.

The National Center for Missing and Exploited Children (NCMEC) has announced a new platform designed to help remove sexually explicit images of minors from the internet. Meta revealed in a blog post that it had provided initial funding to create the NCMEC’s free-to-use “Take It Down” tool, which allows users to anonymously report and remove “nude, partially nude, or sexually explicit images or videos” of underage individuals found on participating platforms and block the offending content from being shared again.

Facebook and Instagram have signed on to integrate the platform, as have OnlyFans, Pornhub, and Yubo. Take It Down is designed for minors to self-report images and videos of themselves; however, adults who appeared in such content when they were under the age of 18 can also use the service to report and remove it. Parents or other trusted adults can make a report on behalf of a child, too.

An FAQ for Take It Down states that users must have the reported image or video on their device to use the service. The content itself isn’t submitted as part of the reporting process and therefore remains private. Instead, the content is used to generate a hash value, a unique digital fingerprint computed from each image or video. That hash can then be provided to participating platforms so they can detect and remove matching content across their websites and apps while minimizing the number of people who see the actual content.
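To illustrate the general idea, here is a minimal Python sketch of hash-based matching. It assumes a plain SHA-256 digest and a simple in-memory hash list; the function names and the shared `reported_hashes` set are hypothetical, and nothing here reflects NCMEC’s or any platform’s actual implementation. The point is that only the fingerprint leaves the device, and platforms compare uploads against fingerprints rather than against the media itself.

```python
import hashlib

# Hypothetical shared hash list. In this sketch only fingerprints are stored,
# never the reported image or video.
reported_hashes: set[str] = set()


def fingerprint(media: bytes) -> str:
    """Compute a SHA-256 hex digest from the media's raw bytes."""
    return hashlib.sha256(media).hexdigest()


def report(media: bytes) -> str:
    """Runs on the user's device: hash the file and 'submit' only the hash."""
    digest = fingerprint(media)
    reported_hashes.add(digest)
    return digest


def should_block(upload: bytes) -> bool:
    """Runs on a platform: check whether an upload matches a reported hash."""
    return fingerprint(upload) in reported_hashes
```

A caveat about this simplification: an exact cryptographic hash like SHA-256 stops matching if a file is altered by even a single byte, for example when it is resized or re-encoded. Production systems often rely on perceptual hashes that tolerate such changes instead.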

“We created this system because many children are facing these desperate situations,” said Michelle DeLaune, president and CEO of NCMEC. “Our hope is that children become aware of this service, and they feel a sense of relief that tools exist to help take the images down. NCMEC is here to help.”

The Take It Down service is comparable to StopNCII, a service launched in 2021 that aims to prevent the nonconsensual sharing of intimate images of people over the age of 18. StopNCII similarly uses hash values to detect and remove explicit content across Facebook, Instagram, TikTok, and Bumble.

In addition to announcing its collaboration with NCMEC in November last year, Meta rolled out new privacy features for Instagram and Facebook that aim to protect minors using the platforms. These include prompting teens to report accounts after they block suspicious adults, removing the message button on teens’ Instagram accounts when they’re viewed by adults with a history of being blocked, and applying stricter privacy settings by default for Facebook users under 16 (or 18 in certain countries).

Other platforms participating in the program have taken steps to prevent and remove explicit content depicting minors. Yubo, a French social networking app, has deployed a range of AI and human-operated moderation tools that can detect sexual material depicting minors, while Pornhub allows individuals to directly issue a takedown request for illegal or nonconsensual content published on its platform.

All five participating platforms have previously been criticized for failing to protect minors from sexual exploitation. A 2021 BBC News report found that children could easily bypass OnlyFans’ age verification systems, and Pornhub was sued the same year by 34 victims of sexual exploitation who alleged that the site knowingly profited from videos depicting rape, child sexual exploitation, trafficking, and other nonconsensual sexual content. Yubo, described as “Tinder for teens,” has been used by predators to contact and rape underage users. And NCMEC estimated last year that Meta’s plan to apply end-to-end encryption to its platforms could effectively conceal 70 percent of the child sexual abuse material currently detected and reported on them.

“When tech companies implement end-to-end encryption, with no preventive measures built in to detect known child sexual abuse material, the impact on child safety is devastating,” DeLaune told the Senate Judiciary Committee earlier this month.

A press release for Take It Down mentions that participating platforms can use the provided hash values to detect and remove images across “public or unencrypted sites and apps,” but it isn’t clear if this extends to Meta’s use of end-to-end encryption across services like Messenger. We have reached out to Meta for confirmation and will update this story should we hear back.