
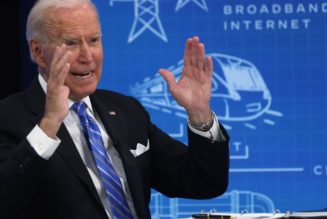

With this proof-of-concept, a developer shows how GPT-3 can be used to respond to queries and control HomeKit devices via Siri.

A developer has put together a GPT-3 demo that attempts to enhance Apple’s Siri voice assistant and allow far more conversational voice commands. In a video posted to Reddit, developer Mate Marschalko shows the assistant controlling his HomeKit smart home devices and answering queries in response to relatively vague prompts that today’s voice assistants like Siri would typically struggle to understand.

For example, the AI is shown turning on Marschalko’s lights in response to the voice prompt “Just noticed that I’m recording this video in the dark in the office, can you do something about that?” Later in the video, Marschalko asks the assistant to set his bedroom to a temperature that would “help me sleep better,” and it responds by setting his bedroom thermostat to 19 degrees Celsius.

In an accompanying blog post, Marschalko explains how the demo works. Essentially, he’s using Apple’s Shortcuts app to interface between Siri, GPT-3, and his HomeKit-enabled smart home devices. A voice command to Siri causes Shortcuts to send a lengthy prompt to the AI service requesting a response in a machine-readable format. Once it gets a response, Shortcuts parses it to control smart home devices and/or respond via Siri. The blog post is worth reading in its entirety for a fuller explanation.
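To make the "parse and act" step more concrete, here is a minimal Python sketch of what a Shortcuts flow like this has to do once the model replies. It is not Marschalko's code: his demo runs inside Shortcuts, and the JSON field names used here (`action`, `device`, `value`, `reply`) are a hypothetical format chosen for illustration. The `Home` class is a stand-in for HomeKit, and no real GPT-3 or HomeKit calls are made.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Home:
    """Hypothetical stand-in for HomeKit: maps device name -> current state."""
    devices: dict = field(default_factory=dict)


def handle_reply(raw: str, home: Home) -> str:
    """Parse a machine-readable model reply, act on it, and return Siri's spoken text.

    Assumed (hypothetical) reply formats:
      {"action": "command", "device": "...", "value": ..., "reply": "..."}
      {"action": "answer", "reply": "..."}
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # The model ignored the requested format and sent plain text.
        return "Sorry, I didn't get a usable response."
    if not isinstance(data, dict):
        return "Sorry, I didn't get a usable response."

    action = data.get("action")
    if action == "command":
        device = data.get("device")
        if device not in home.devices:
            # Refuse to act on a device the home doesn't have.
            return f"I couldn't find a device called {device!r}."
        home.devices[device] = data.get("value")
    elif action != "answer":
        return "Sorry, I didn't understand that."
    # Both commands and plain answers end with something for Siri to say.
    return data.get("reply", "Done.")


if __name__ == "__main__":
    home = Home({"office light": "off", "bedroom thermostat": 21})
    raw = ('{"action": "command", "device": "bedroom thermostat", '
           '"value": 19, "reply": "Setting the bedroom to 19 degrees."}')
    print(handle_reply(raw, home))
    print(home.devices["bedroom thermostat"])
```

Validating the reply before touching any device matters here: the model only *usually* follows the requested format, so anything that fails to parse or names an unknown device should produce a spoken apology rather than a state change.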

It’s an intriguing demo, and shows what voice assistants could be capable of by integrating this new generation of AI technology, but it’s not perfect. For starters, Marschalko says that each command costs $0.014 in GPT-3 API fees. The video is also edited, so it’s unclear whether the demo works 100 percent of the time or whether responses are being shown selectively. And a more intelligent backend won’t help if the voice recognition software can’t understand what words a user is saying in the first place.
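To put the $0.014 figure in perspective, the short Python sketch below turns it into a monthly estimate. The usage level (20 commands a day over a 30-day month) is a hypothetical assumption, and the sketch assumes each command triggers exactly one GPT-3 request at that price.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-command cost Marschalko reports for one GPT-3 API request.
COST_PER_REQUEST = Decimal("0.014")


def monthly_cost(commands_per_day: int, days: int = 30) -> Decimal:
    """Estimate API spend, assuming one GPT-3 request per voice command."""
    requests = commands_per_day * days
    total = COST_PER_REQUEST * requests
    # Round to whole cents for display.
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Hypothetical usage level: 20 commands a day.
    print(monthly_cost(20))  # 8.40
```

At that assumed rate, 600 commands a month would come to about $8.40, which is small for a hobby project but adds up quickly for a service with millions of users.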

The demo raises larger questions about how we want voice assistants to work. Do you really want to have a conversation with a voice assistant, or do you just want to be able to bark short and simple commands at it like a robot? Marschalko himself also flags the risk of the assistant saying “unexpected things” in a follow-up Reddit comment.

Nevertheless, the demo still offers fascinating hints of what sort of voice assistant interactions might soon be possible if the likes of Apple, Amazon, and Google integrate this technology into their services. And it’s impressive that these tools are open and approachable enough that a relatively slick demo like this can be hacked together using widely available consumer software.