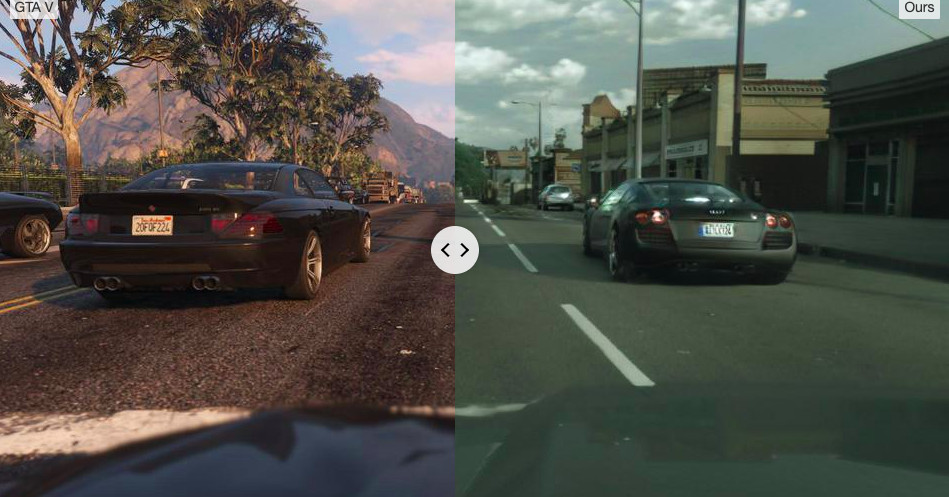

One of the more impressive aspects of Grand Theft Auto V is how closely the game’s San Andreas approximates real-life Los Angeles and Southern California, but a new machine learning project from Intel Labs called “Enhancing Photorealism Enhancement” might take that realism in an unsettlingly photorealistic direction (via Gizmodo).

Putting the game through the process that researchers Stephan R. Richter, Hassan Abu Alhaija, and Vladlen Koltun created produces a surprising result: a look with unmistakable similarities to the kinds of photos you might casually take through the smudged front window of your car. You have to see it in motion to really appreciate it, but the combination of slightly washed-out lighting, smoother pavement, and believably reflective cars sells the idea that you’re looking out at a real street from a real dashboard, even if it’s all virtual.

The Intel researchers suggest some of that photorealism comes from the datasets they fed their neural network. The group offers a more thorough explanation of how the image enhancement actually works in their paper (PDF), but as I understand it, the Cityscapes Dataset they used, built largely from photographs of German streets, filled in a lot of the detail. The footage is dimmer and shot from a different angle, but it almost captures what I imagine a smoother, more interactive version of scrolling through Google Maps’ Street View could be like. It doesn’t entirely behave like it’s real, but it looks very much like it’s built from real things.

The researchers say their enhancements go beyond what other photorealistic conversion processes are capable of by also integrating geometric information from GTA V itself. Those “G-buffers,” as the researchers call them, can include data like the distance between objects in the game and the camera, and the quality of textures, like the glossiness of cars.
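To make the idea concrete, here is a minimal sketch of how per-pixel G-buffer data could sit alongside a rendered frame as extra input for a network. It is an illustration only, not the researchers' code: the names `GBufferPixel` and `stack_channels`, the choice of just two channels, and the units and value ranges are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class GBufferPixel:
    """Hypothetical per-pixel record; a real G-buffer holds more channels."""
    depth: float       # distance from the camera to the surface (assumed units)
    glossiness: float  # 0.0 = matte, 1.0 = mirror-like (assumed range)


def stack_channels(frame: List[List[RGB]],
                   gbuffer: List[List[GBufferPixel]]) -> List[List[List[float]]]:
    """Append depth and glossiness to each pixel's color: [r, g, b, depth, gloss]."""
    if len(frame) != len(gbuffer):
        raise ValueError("frame and G-buffer must have the same height")
    stacked = []
    for color_row, info_row in zip(frame, gbuffer):
        if len(color_row) != len(info_row):
            raise ValueError("frame and G-buffer must have the same width")
        stacked.append([
            [r, g, b, info.depth, info.glossiness]
            for (r, g, b), info in zip(color_row, info_row)
        ])
    return stacked


# A 1x2 "frame": a glossy car pixel near the camera, a matte road pixel farther away.
frame = [[(0.8, 0.1, 0.1), (0.3, 0.3, 0.3)]]
gbuffer = [[GBufferPixel(depth=4.0, glossiness=0.9),
            GBufferPixel(depth=25.0, glossiness=0.1)]]

features = stack_channels(frame, gbuffer)
print(features[0][0])  # [0.8, 0.1, 0.1, 4.0, 0.9]
```

The point of the extra channels is that a network looking at the red pixel no longer has to guess whether it belongs to a shiny car or a painted wall; the game has already supplied that information.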

While you might not see an official “photorealism update” roll out to GTA V tomorrow, you may have already played a game or watched a video that’s benefited from another kind of machine learning: AI upscaling. Using machine learning to blow up graphics to higher resolutions doesn’t show up everywhere, but it has been featured in Nvidia’s Shield TV and in several mod projects focused on upgrading the graphics of older games. In those cases, a neural network predicts the missing pixels of detail in a lower-resolution game, movie, or TV show to reach those higher resolutions.
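For a sense of what "filling in missing pixels" means, here is a rough sketch of the simplest non-AI baseline, nearest-neighbor upscaling, which just copies each existing pixel into the new gaps. This is not how any particular AI upscaler is implemented; the function name `upscale_nearest` and the tiny grayscale image are made up for the example. An AI upscaler would replace the copying rule with values predicted by a trained network.

```python
from typing import List


def upscale_nearest(image: List[List[int]], factor: int) -> List[List[int]]:
    """Enlarge a grayscale image by repeating every pixel `factor` times in each direction."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    enlarged = []
    for row in image:
        # Repeat each pixel horizontally...
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        for _ in range(factor):
            enlarged.append(list(wide_row))
    return enlarged


# A hypothetical 2x2 grayscale image (0 = black, 255 = white).
small = [[10, 200],
         [60, 120]]

big = upscale_nearest(small, 2)
for row in big:
    print(row)
# [10, 10, 200, 200]
# [10, 10, 200, 200]
# [60, 60, 120, 120]
# [60, 60, 120, 120]
```

The output has four times as many pixels but no new detail, which is why this approach looks blocky and why learned predictions can look sharper.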

Photorealism probably shouldn’t be the only graphical goal for video games to have (artistry aside, it looks kind of creepy), but this Intel Labs project does show there’s probably as much room to grow on the software side of things as there is in the raw GPU power of new consoles and gaming PCs.